In the 15 years since I started writing MysteryPollster, pre-election polling has changed a lot, yet when it comes to what we expect from polls, much remains the same. We still assume polls can tell us “what’s going to happen,” when the most clear-cut finding right now is that we just don’t know.

Yes, changing survey methods deserve close scrutiny and warrant caution, but if the primary election results take us by surprise, I’d wager a major culprit will be our long-held tendency to overlook voter uncertainty and assume too much precision in horse-race polling.

Let’s start with what has changed.

In 2004, most state pre-election polls were conducted by landline telephone because nearly all U.S. adults (92%) had a landline phone, and just 7% of voters were cell-phone-only. Today, more than half of Americans (57%) have dropped landlines in favor of mobile phones, and nearly all live-interviewer telephone surveys call both mobile and landline phones.

In 2004, the few media polls conducted online were largely dismissed by mainstream news outlets because those polls typically sampled from “opt-in” panels rather than using more traditional random sampling that could theoretically reach the full population. Today, owing in part to much lower response rates that helped drive up the cost of telephone surveys, election polls are increasingly conducted online, especially at the national level. This past November and December, online surveys accounted for 40 of the 55 national Democratic primary polls compiled by FiveThirtyEight.

Media coverage of polling has also changed, mostly for the better. Fifteen years ago, blogs like mine were as close as we came to a social media conversation about pre-election polls. Few media pollsters discussed their methods outside of academic and professional journals or conferences.

Today, many news sites provide highly sophisticated polling analysis, averages and charts, and many pollsters and academic experts routinely engage in social media discussions of their work.

So the good news is that we have plentiful analysis on how the changes in survey methods might affect their accuracy (one great example is the recently published “Field Guide to Polling: 2020 Edition” from the Pew Research Center).

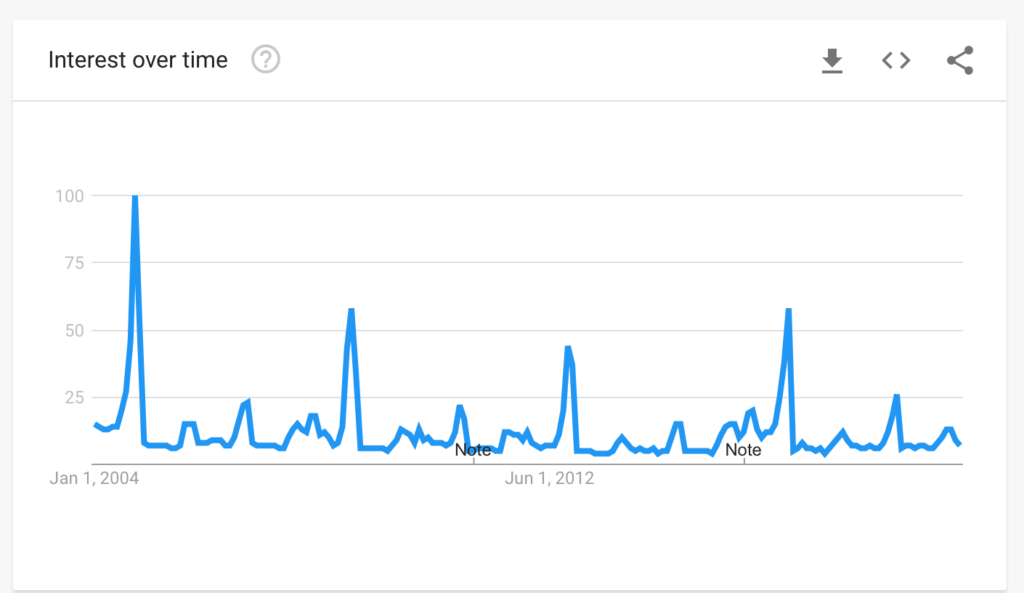

But for all the changes, most of us who follow politics still crave the latest polls, especially around elections, as we always have. Consider the trend in Google searches for the word “poll,” which has shown the same seasonal pattern since 2004. Poll searches spike to their highest levels in October or early November of presidential election years. They also spike, but not quite as high, just before “off-year” general elections and in the period we are in right now: just before the early presidential primaries.

Source: Google Trends

With that craving comes our long-ingrained habit of “serially, myopically obsessing over each individual horse-race poll as it’s released,” as my former colleague Ariel Edwards-Levy puts it. Since November, headline writers have catered to that appetite by trumpeting poll “surges” for Pete Buttigieg, Joe Biden, Bernie Sanders and, just yesterday, Tom Steyer.

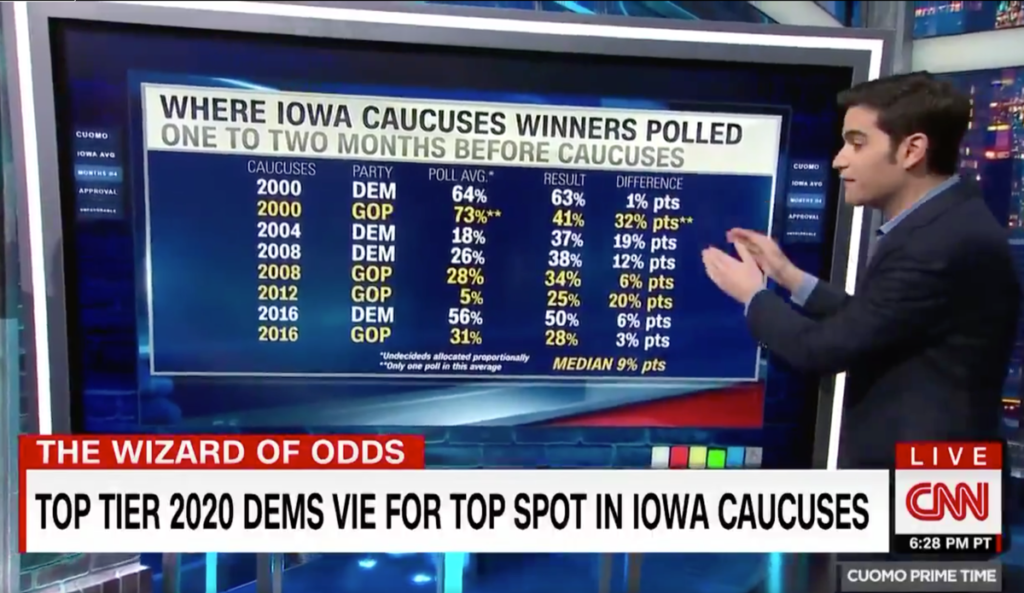

Implicit in all this is the assumption that ample polling can measure the state of the race, “with, like, crazy precision” as Chris Cuomo recently put it on CNN. The problem is that polls have always been imprecise, at best, in predicting the ultimate outcome, especially in a wide-open, multi-candidate primary contest like the one we’re watching now.

In the same CNN segment, Harry Enten explained that the median shift in Iowa for the winner, comparing poll averages a month out to the final result, has been nine percentage points over the last eight contests since 2000. According to Enten, the shifts were as large as 20 percentage points in 2012 and 32 points in 2000.

Source: Twitter

Iowa is just the beginning, of course, but the early primaries help narrow the field and usually cause voter preferences to change further. Consider the 2004 Democratic primaries, which saw one of the biggest nationwide shifts of the last few decades. Support among Democrats for John Kerry truly surged from the single digits to near 50 percent during January after he unexpectedly won both Iowa and New Hampshire.

Why so much potential volatility? The reason is something plainly evident in the current polling: Democratic voters are having a tough time choosing a favorite from a field of candidates they like. Yes, the vast majority will name one candidate as their first choice when asked to do so by a pollster. But on follow-up questions, massive numbers of the same voters say their minds are not made up:

- Fox News polls released this week found 51% of Democratic caucus-goers in Nevada and 48% of Democratic voters in South Carolina saying they could still change their minds.

- Using a different question format, recent CBS/YouGov polls found just 27% of likely Democratic primary voters in New Hampshire, and just 31% of caucus-goers in Iowa, saying they had “definitely made up my mind” about their first choice.

- A Quinnipiac poll of New Hampshire in November found 61% of likely Democratic primary voters saying their minds “might change” about their first choice before the primary.

- In a national poll in early December, Quinnipiac found 59% of likely Democrats reporting their “minds might change.”

Edwards-Levy has been regularly reporting results like these as “the one number you should be looking at.” She’s right about that. Democratic primary voters “are still very far from settling on their decisions,” she concluded in November. That’s still true now, and probably will be for another few months at least.

Buckle up!

Typos corrected. Photo credit: Miryam León on Unsplash

Hi Mark, please add me to your email list. Also, you can track our latest UC Berkeley California polls on our website at https://igs.berkeley.edu/igs-poll/berkeley-igs-poll. We’re releasing a new one today!

All the best,

Mark DiCamillo

Director, Berkeley IGS Poll

This was published recently at Survey Practice. https://www.surveypractice.org/article/11736-sample-size-and-uncertainty-when-predicting-with-polls-the-shortcomings-of-confidence-intervals

It’s right in line with what you say here. There are better ways to assess polls than with confidence intervals, and key factors like non-response are poorly understood.

Welcome back, Mark. We should talk, and time may be short.