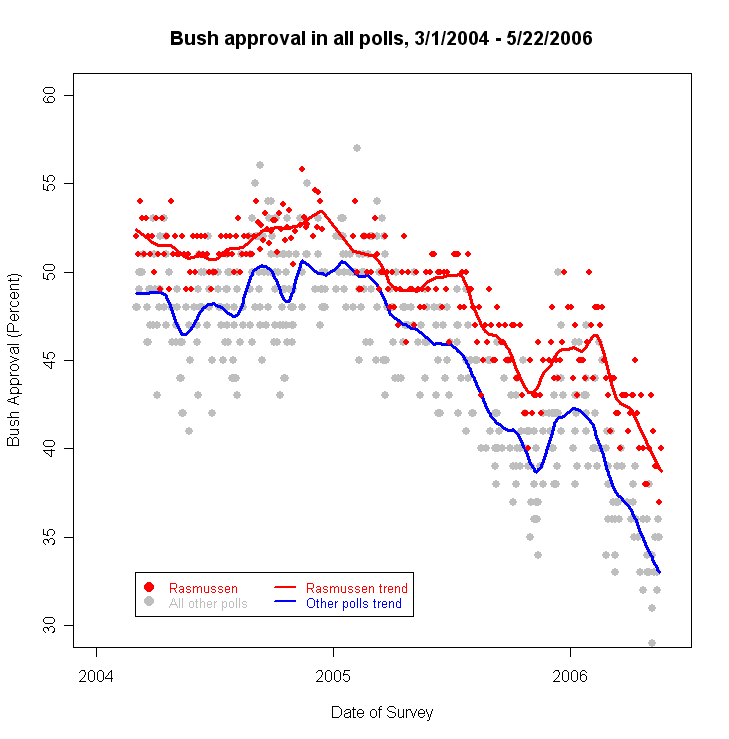

Although I am still playing catch-up on the “day job,” I want to highlight something that appeared without fanfare over the last week on the Bush job approval page of the Rasmussen Reports website. Rasmussen, as most regular readers know, conducts surveys using an automated methodology that asks respondents to answer questions by pushing buttons on their touch-tone telephones. I have looked closely at the Bush job rating as reported by Rasmussen and noted that their surveys report an approval percentage consistently 3 to 4 percentage points higher than the results of other national surveys of adults (see the graphic produced by Charles Franklin). Last week, Rasmussen offered this explanation:

> When comparing Job Approval ratings between different polling firms, it’s important to focus on trends rather than absolute numbers. One reason for this is that different firms ask Job Approval questions in different ways. At Rasmussen Reports, we ask if people Strongly Approve, Somewhat Approve, Somewhat Disapprove, or Strongly Disapprove of the way the President is performing his job. This approach, in the current political environment, yields results about 3-4 points higher than if we simply ask people if they approve or disapprove (we have tested this by asking the question both ways on the same night). Presumably, this is because some people who are a bit uncomfortable saying they “Approve” are willing to say they “Somewhat Approve.” It’s worth noting that, with our approach, virtually nobody offers a “Not Sure” response when asked about the President.

Although I had not considered this possibility before, Rasmussen’s finding makes a lot of sense. In my own experience, the word “somewhat” (as in somewhat agree, somewhat favor, somewhat approve, etc.) softens the choice and makes it more appealing.

It is worth noting that virtually all of the other national public pollsters begin with a question posing the simple two-way choice: “Do you approve or disapprove of the way George W. Bush is handling his job as president?” A few then follow up with a second question, such as the one used by the ABC News/Washington Post poll: “Do you approve/disapprove strongly or somewhat?” AP-IPSOS uses a similar follow-up, as do LA Times/Bloomberg, Diageo/Hotline and Cook/RT Strategies.

The key point: On the Rasmussen surveys, respondents choose among four categories: strongly approve, somewhat approve, somewhat disapprove and strongly disapprove. On all of the other conventional surveys, including those listed above that later probe for how strongly respondents approve, respondents initially choose between just two categories: approve and disapprove.** They hear the intensity follow-up (strongly or somewhat) only after answering the first part of the question.

So, except for the pollsters that use entirely different answer categories (Harris and Zogby, whose categories are excellent, good, fair or poor), Rasmussen is the only national pollster I am aware of that presents an initial choice involving more than “approve” or “disapprove.” As Rasmussen argues, the consistently higher percentage he gets for the Bush job approval rating may well be an artifact of the way he asks the question. If so, the difference in the graph above may be due to what pollsters call “measurement error.”

I hope Scott Rasmussen will consider releasing the data gathered in the experiment that “tested this by asking the question both ways on the same night.” This sort of side-by-side controlled experiment (in which the pollster randomly divides the sample and asks a slightly different version of the same question of each half) is a great example of the scientific approach to questionnaire design and survey analysis. We would all benefit from learning more.
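For readers who want to see the mechanics, the split-sample design described above can be sketched in a few lines of Python. This is a minimal illustration, not Rasmussen's actual procedure: the function names and the counts in the usage lines are hypothetical, and the comparison uses a standard two-proportion z-test, which assumes the two halves are independent random samples.

```python
import math
import random


def split_sample(respondent_ids, seed=None):
    """Randomly divide respondents into two halves (form A and form B)."""
    rng = random.Random(seed)
    ids = list(respondent_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


def two_proportion_z_test(approve_a, n_a, approve_b, n_b):
    """Two-sided z-test for a difference between two independent proportions.

    Returns (difference in proportions, z statistic, two-sided p-value).
    Assumes the pooled proportion is strictly between 0 and 1.
    """
    p_a = approve_a / n_a
    p_b = approve_b / n_b
    # Pooled proportion under the null hypothesis that both forms yield the same approval rate
    pooled = (approve_a + approve_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Hypothetical example: 1,000 respondents, half hear the four-category question (form A),
# half hear the two-way approve/disapprove question (form B). Counts are invented.
form_a, form_b = split_sample(range(1000), seed=1)
diff, z, p = two_proportion_z_test(230, len(form_a), 212, len(form_b))
print(f"difference = {diff:.3f}, z = {z:.2f}, p = {p:.2f}")
```

With these made-up counts, a 3.6-point gap between two halves of 500 is not statistically significant (p is roughly 0.25), which is one reason the sample size of any such experiment would matter when interpreting it.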

**All of the pollsters mentioned above allow for a volunteered “don’t know” response in some form, although the procedures that determine how hard live interviewers press uncertain respondents for an answer may vary among polling organizations.

Couldn’t this work both ways? Might there be some people who would feel troubled saying “Disapprove” but would feel OK saying “Somewhat Disapprove”? Or is there some psychological reason to think this would work only in the positive direction?

Interesting point, Jon. I was thinking it might be some sort of ordering issue. The options in the Rasmussen poll are given in order: strongly approve, then somewhat approve, before the disapprove options. I would think the psychological instinct for someone with modest doubts about Bush’s performance would be to reject the first option (strongly approve) and take the second (somewhat approve). In the other polls, someone with modest doubts about Bush’s performance immediately jumps to the second category, disapproval.

Which is closer to the truth? I would say neither, but they are internally consistent in their errors (which may be as close as we come to truth in this sphere of being). I would flip-flop and put the disapproval options first for half my sample. That is, if I were a pollster, not just an interested geek.

Or maybe Rasmussen is a Republican pollster who oversamples his base.

Greg in LA has it exactly right. Rasmussen is a Republican. His polls have always overstated Republican support. He should not be taken seriously. (Same thing with Strategic Vision). It’s disappointing that MP gives this guy any exposure at all. He’s a partisan hack.

Interesting. Setting aside, for the moment, the gross use of ad hominem to discredit Rasmussen by Greg and Aaron…

I would love to see, as mdh suggests, Rasmussen do the next set of scientific studies, flip-flopping the order of responses, to see if it induces a different bias. What would it look like? Could it be a mirror image? Would it be identical across both anyway? I can’t imagine this taking any more resources than the other test they conducted (of course, they may not want to keep spending resources that way).

I actually did something methodologically similar with a multiple-choice exam. I gave two versions of the exam with identical questions. The intent was to discourage casual cheating by alternating tests. I wanted to keep the tests “the same” as far as difficulty, so I simply reversed the order of the questions. Interestingly, the means for the two tests were different. Curiosity got the better of me, so I treated the two groups as what amounted to two different samples and did a test of means. Outcome? There was a statistically significant difference.

What could have caused it? It appeared that the lower scores were on the tests that started with the (marginally) more difficult questions. Could this be a psychological impact on the students? I don’t know. I may continue this “study” further (unless, of course, someone can point me to studies that have already done this!)
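For readers curious about Steve's “test of means,” one common choice for comparing two independent groups of exam scores is Welch's two-sample t-test; we don't know which test he actually ran. The sketch below computes the t statistic and its approximate (Welch-Satterthwaite) degrees of freedom using only the Python standard library. The function name and the scores are invented for illustration.

```python
import math
import statistics


def welch_t(scores_a, scores_b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for the difference in means between two independent groups."""
    n_a, n_b = len(scores_a), len(scores_b)
    mean_a, mean_b = statistics.mean(scores_a), statistics.mean(scores_b)
    # statistics.variance is the sample variance (n - 1 denominator)
    se2_a = statistics.variance(scores_a) / n_a
    se2_b = statistics.variance(scores_b) / n_b
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical scores for two versions of the same exam (questions in forward vs. reversed order)
form_forward = [70, 75, 80, 85, 90]
form_reversed = [60, 65, 70, 75, 80]
t, df = welch_t(form_forward, form_reversed)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Turning t and df into a p-value needs a t table or a library routine such as SciPy's `scipy.stats.ttest_ind` with `equal_var=False`. The logic is the same as a pollster's split-sample experiment, and the result is easiest to interpret when the two versions are handed out at random.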

Back to the ad hominem: Come on, guys… think for a minute: why would one want to intentionally deceive oneself, or one’s clients? If one wants to win, one should work hard to know the “real” opinions, if one can find them… I don’t discredit Mark simply because he is an admitted Democratic pollster.

Steve

Um, thanks Steve! 🙂

For the record, I’ve got a new post up this morning that responds directly to the questions from John Willets.

Steve, I never said it was intentional. It’s human nature that people who are biased tend to make biased assumptions in their turnout models, etc. I think that’s the case with both Rasmussen and Strategic Vision. Remember, Rasmussen Reports isn’t his first attempt at a polling outfit. Before that, he had Portrait of America, which had Bush beating Gore by 8 points in its final poll. Rasmussen shut that outfit down because it was so discredited. Beyond that, credible pollsters do not endorse his automated methodology.

There’s simply no reason to consider Rasmussen a serious pollster worthy of serious discussion.