First, my apologies for not posting yesterday. The Inauguration was also a federal holiday, which meant no child care in my household and a day of being Mystery Daddy not Mystery Pollster.

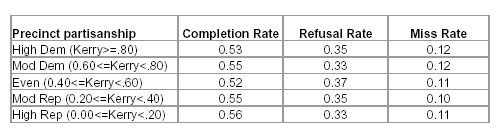

So without further ado: Though the Edison/Mitofsky Report gives us much to chew over, the table drawing the most attention is the one on page 37 that shows completion rates for the survey by the level of partisanship of the precinct.

A bit of help for those who may be confused: Each number is a percentage and you read across. Each row shows completion, refusal and miss rates for various categories of precincts, categorized by their level of partisanship. The first row shows that in precincts that gave 80% or more of their vote to John Kerry, 53% of voters approached by interviewers agreed to be interviewed, 35% refused and another 12% should have been approached but were missed by the interviewers.

Observers on both sides of the political spectrum have concluded that this table refutes the central argument of the report, namely that the discrepancy in Kerry’s favor was “most likely due to Kerry voters participating in the exit polls at a higher rate than Bush voters” (p. 3). Jonathan Simon, who has argued that the exit poll discrepancies are evidence of an inaccurate vote count, concluded in an email this morning that the above table “effectively refutes the Reluctant Bush Responder (aka differential response) hypothesis, and leaves no plausible exit poll-based explanation for the exit poll-vote count discrepancies.” From the opposite end of the spectrum, Gerry Dales, editor of the blog DalyThoughts, [in a comment here] uses the table to “dismiss the notion that the report has proved…differential non-response as a primary source of the statistical bias” (emphasis added). He suspects malfeasance by the interviewers rather than fraud in the count.

The table certainly challenges the idea that Kerry voters participated at a higher rate than Bush voters, but I am not sure it refutes it. Here’s why:

First, the table shows response rates for precincts, not voters. Unfortunately, we have no way to tabulate response rates for individuals because we have no data for those who refused or were missed. Simon and Dales are certainly both right about one thing: If completion rates were uniformly higher for Kerry voters than Bush voters across all precincts, the completion rates should be higher in precincts voting heavily for Kerry than in those voting heavily for Bush. If anything, completion rates in the table above look slightly higher in the Republican precincts.

However, the difference in completion rates need not be uniform across all types of precincts. Mathematically, an overall difference in completion rates will be consistent with the pattern in the table above if you assume that Bush voter completion rates tended to be higher where the percentage of Kerry voters in the precincts was lower, or that Kerry voter completion rates tended to be higher where the percentage of Bush voters in the precincts was lower, or both. I am not arguing that this is likely, only that it is possible.
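To make that possibility concrete, here is a minimal sketch with made-up numbers. None of the rates below come from the Edison/Mitofsky report, and the function names are purely illustrative. It compares a uniform gap (Kerry voters complete at 58%, Bush voters at 50% everywhere) with a gap that narrows in Republican precincts because Bush voters respond more readily where Kerry voters are scarcer.

```python
# Hypothetical illustration only: none of these rates come from the report.
# Kerry vote shares at the midpoints of the five precinct categories,
# ordered from High Rep to High Dem.
KERRY_SHARES = [0.1, 0.3, 0.5, 0.7, 0.9]


def precinct_completion(kerry_share, bush_rate, kerry_rate):
    """Overall completion rate: average of the two groups' rates,
    weighted by each candidate's share of the precinct's vote."""
    return bush_rate * (1 - kerry_share) + kerry_rate * kerry_share


def uniform_gap(kerry_share):
    """Assumed scenario: the same 8-point gap in every precinct.
    Returns (bush_rate, kerry_rate)."""
    return 0.50, 0.58


def varying_gap(kerry_share):
    """Assumed scenario: Bush voters complete more often where Kerry
    voters are scarcer. Returns (bush_rate, kerry_rate)."""
    return 0.56 - 0.10 * kerry_share, 0.58


def completion_by_precinct(scenario):
    """Precinct-level completion rates for each Kerry share."""
    return [precinct_completion(K, *scenario(K)) for K in KERRY_SHARES]


for name, scenario in [("uniform", uniform_gap), ("varying", varying_gap)]:
    rates = completion_by_precinct(scenario)
    print(name, [f"{r:.1%}" for r in rates])
```

Under the uniform scenario the precinct-level rates climb steadily from the most Republican to the most Democratic precincts. Under the varying scenario they stay within a few points of each other, even though Kerry voters still complete at a higher rate than Bush voters in every category. Again, this shows only that the pattern is arithmetically possible, not that it is what happened.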

Note also that the two extreme precinct categories are by far the smallest (see the table at the bottom of p. 36): Only 40 precincts of 1250 (3%) were “High Rep” and only 90 were “High Dem” (7%). More than three quarters were in the “Even” (43%) or “Mod Rep” (33%) categories. Not that this explains the lack of a pattern – it just suggests that the extreme precincts may not be representative of most voters.

Second, as Gerry seems to anticipate in his comments yesterday, the completion rate statistics are only as good as the interviewers that compiled them. Interviewers were responsible for counting each voter they missed or that refused to be interviewed and keeping tallies on their race, gender and approximate age. The report presents overwhelming evidence that errors were higher for interviewers with less experience. One hypothesis might be that some interviewers made improper substitutions without recording refusals appropriately.

Consider my hypothetical in the last post: A husband is selected as the nth voter but refuses. His spouse offers to complete the survey instead. The interviewer breaks with the prescribed procedure and allows the spouse to do the interview (rather than waiting for the next nth voter). [Note: this is actually not a hypothetical – I exchanged email with a professor who reported this example after debriefing students he helped recruit as NEP interviewers]. Question is: would the interviewer record the husband as a refusal? The point is that the same sloppiness that allows an eager respondent to volunteer (something that is impossible, by the way, on a telephone survey) might also skew the completion rate tallies. Presumably, that is one reason why Edison/Mitofsky still plans to conduct “in-depth” interviews with interviewers in Ohio and Pennsylvania (p. 13) – they want to understand more about what interviewers did and did not do.

Third, there is the possibility that some Bush voters chose to lie to the exit pollsters. Any such behavior would have no impact on the completion rate statistics. So why would a loyal Bush voter want to do that? Here’s what one MP reader told me via email. As Dave Barry used to say, I am not making this up:

Most who are pissed off about the exit polls are Democrats or Kerry supporters. Such people are unlikely to appreciate how profoundly some Republicans have come to despise the mainstream media, just since 2000. You have the war, which is a big one. To those who support Bush much of the press have been seditious. So, if you carry around a high degree of patriotism you are likely to have blood in your eye about coverage of the Iraq war, alone. Sean Hannity and Rush Limbaugh had media in their sights every day leading up to the 2004 election, and scored a tremendous climax with the Rather fraud and NY Times late-hit attempt. I was prepared to lie to the exit pollster if any presented himself. In fact, however, I can’t be sure I would have, and might have just said “none of your f—ing business.” We can’t know because it didn’t happen, but I do know the idea to lie to them was definitely in my mind.

Having said all that, I think Gerry Dales has a point about the potential for interviewer bias (Noam Scheiber raised a similar issue back in November, and in retrospect, I was too dismissive). The interviewers included many college students and holders of advanced degrees who were able to work for a day as an exit pollster. Many were recruited by college professors or (less often) on “Craigslist.com.” It’s not a stretch to assume that the interviewers were, on average, more likely to be Kerry voters than Bush voters.

My only difference with Gerry on this point is that such bias need not be conscious or intentional. Fifty years or more of academic research on interviewer effects shows that when the majority of interviewers share a point of view, the survey results often show a bias toward that point of view. Often the reasons are elusive and presumed to be subconscious.

Correction: While academic survey methodologists have studied interviewer effects for at least 50 years, their findings have been inconsistent regarding correlations between interviewer and respondent attitudes. I would have done better to cite the conventional wisdom among political pollsters that the use of volunteer partisans as interviewers, even when properly trained and supervised, will often bias the results in favor of the sponsoring party or client. We presume the reasons for that bias are subconscious and unintentional.

In this case, the problem may have been as innocent as an inexperienced interviewer feeling too intimidated to follow the procedure and approach every “nth” voter and occasionally skipping over those who “looked” hostile, choosing instead those who seemed more friendly and approachable.

One last thought: So many who are considering the exit poll problem yearn for simple, tidy answers that can be easily proved or dismissed: It was fraud! It was incompetence! Someone is lying! Unfortunately, this is one of those problems for which simple answers are elusive. The Edison/Mitofsky report provides a lot of data showing precinct level characteristics that seem to correlate with Kerry bias. These data make a compelling case that whatever the underlying problem (or problems), they were made worse by young, inexperienced or poorly trained interviewers especially when up against logistical obstacles to completing an interview. It also makes clear (for those who missed it) that these problems have occurred before (pp. 32-35), especially in 1992 when voter turnout and voter interest were at similarly high levels (p. 35).

Many more good questions, never enough time. Hopefully, another posting later tonight… if not, have a great weekend!

Any chance of correlation with other races in a precinct? Certainly too hard to do nationwide, but maybe in selected precincts. If there is accuracy for the exit polls in statewide races or Congressional races but inaccuracy for the presidential race then this points to a vote counting issue. If not then this proves nothing one way or another. Ohio and Florida both were electing Senators.

Good post Mark. Your patience and efforts to explain this are admirable.

RE: The hypothesis that Bish voters would have lied.

My father-in-law is a ret. USMC Lt. Col. and absolute Kerry Hater since the early 1960s. He told me one night in October that he was polled by Gallup. I’d never heard of someone actually getting called so it piqued my interest. He told them that he would vote for Kerry because the polls are “biased” anyhow and he wanted to keep them guessing.

I don’t understand the logic, but I certainly know MANY who despise MSM and anything other than that which is hosted by Fox News, Rush, or other established conservative outlet.

Also, the part about the report that I thought was interesting was the mixed precinct-polling stations. I poll-watched 3 precincts on election day. Each precinct was grouped with 3 other polling stations.

At each of the polling stations I visited, if an exit pollster was required to stand outside the building, they would have no idea which precinct the exiting voter actually voted in.

Sorry.. “Each precinct was grouped with 3 other polling stations.”

That should read, “Each of my precincts were grouped with 2 other precincts in the same polling station.”

Letter To Mystery Pollster

Mystery Pollster Mark Blumenthal tickled my mailbox with a note alerting me that he had posted a followup to the Mitofsky report. Since his post had made note of several things I have brought up, sometimes in agreement, sometimes in disagreement, and s…

Note that the highest refusal rate is in the “neutral” precincts. Is it possible that someone who voted for the “wrong candidate” would be likely to refuse to complete a form while their buddy/spouse who voted for the right candidate waited?

Here’s an interesting and relevant anecdote.

http://www.democraticunderground.com/discuss/duboard.php?az=show_mesg&forum=203&topic_id=97506&mesg_id=97575&page=

Dag nabbit! Sorry, it should be “BUsh voters” and “since the early 1970s.” Geez.

ftr – john conyers has written to mitofsky and rosin again, looking for the raw data. the letter is here:

http://www.freepress.org/departments/display/19/2005/1112

i havent had a chance to fully analyse the report yet – but did notice a couple of things that people might consider when looking at the WPE numbers:

1) the stats about the interviewers are incomplete – the report (somewhat confusingly) describes why n=1250 (rather than 1480), however for many of the remaining questions, n is approx 1100, and 1118, and 1127, and 1180, and 1240 etc – so just keep an eye out for that. if they cant even interview their own interviewers properly then…

2) keep an eye on the sub-sample n sizes for each issue the report discusses – sometimes the report draws apparent conclusions based on small numbers

3) similarly, be wary of making simplistic logic ‘shortcuts’ – for example, one of the memes which seems to be catching fire is that ‘smart people had higher error rates (presumed bias)’ – although the data doesnt necessarily say that – its true that the ‘advanced degree’ had the highest mean WPE, but it isnt significantly higher than ‘1-3 years college’ – and if you look at MedianWPE, the ‘smartest’ category has the 2nd lowest error, *much* better than ‘1-3 years college’ (5.2 vs 7.0)

4) also, be aware that some of the statements made in the report are somewhat misleading – for example, “The completion rates tend to be slightly higher in precincts with more educated interviewers”.

given that the ‘1-3 yr’ category included *half* the interviewers, and they only got 53% completion, the AdvGrads’ 60% completion rate seems ‘a lot’ higher.

the above points arent designed to make any particular point – other than we need to be careful when looking at the numbers (of course) and be wary of some of the apparent conclusions.

separately, to MP’s “Reluctant Bush Responder” point, it ought be noted that the nsize of ‘Mod Rep’ category is 250% larger than the ‘Mod Dem’ category, which is curious in itself, and could dwarf any of the other issues.

cheers.

rick – i think that you said somewhere that early polls are often inaccurate (i cant lay my hands on the quote at the mo) – no surprises there given that sample size increases throughout the day. on p19&20 the report seems to show that things got much worse throughout the day (with the geo and composite estimates) – the report actually seems to try to hide the fact.

incredibly, the number of t>1 states actually grew by 80% between call1 and call3 (composite/pres). does anyone have any insight/speculation as to how this can happen?

Convincing Evidence That the Exit Poll Discrepancies Were Caused by Bush Supporters Being Less Willing Than Kerry Supporters to Fill Out Exit Poll Questionnaires?

Edison/Mitofsky today publicly released a 77 page report on what went wrong in their Election 2004 exit polls.

I believe it’s worth estimating explicitly how big the differential response rates would have to be in order to explain all of the within precinct errors. The Edison/Mitofsky report doesn’t do this explicitly, but the data it gives on pages 36 & 37 of its report suffices for a calculation under the mild additional assumption that third party voting was negligible.

The simple algebra is as follows. (Before continuing, please note that this argument with additional commentary and nifty HTML tables can be found on my blog at http://williamkaminsky.typepad.com/too_many_worlds/2005/01/direct_evidence.html ):

Let K = Kerry’s vote percentage. Thus, 1-K is assumed to be Bush’s vote percentage. Let c = the mean completion rate for all voters, and W = the mean within precinct error. We seek to solve for b = the completion rate for Bush voters and k = the completion rate for Kerry voters. Thus, we solve this pair of equations

c = b(1-K) + kK

W = [b(1-K) – kK]/c – (1 – 2K)

[Justification: The first equation says the mean total completion rate c has to be the average of the mean Bush and Kerry completion rates, b and k, weighted by the respective Bush and Kerry votes, 1-K and K. The second equation says the mean within precinct error W is the exit poll (Bush-Kerry) vote margin [b(1-K) – kK]/c minus the official (Bush-Kerry) vote margin (1 – 2K).]

After some simple algebra, these equations yield

b = c + cW / [2(1-K)]

k = c – cW/(2K)

Using the above quoted data from the Edison/Mitofsky Report and taking K to be the midpoint of the Kerry vote intervals defining the precinct partisanship categories, I produce the following estimates:

| Precinct Partisanship (K) | Mean WPE (W) | Measured Total Response Rate (c) | Estimated Kerry Response Rate (k) | Estimated Bush Response Rate (b) | Estimated Kerry-Bush diff response rate that accounts for all of W |
|---|---|---|---|---|---|
| Highly Democratic (K = 90%) | 0.3% | 53% | 53% | 54% | -1 percentage point |
| Moderately Democratic (K = 70%) | -5.9% | 55% | 57% | 50% | 7 percentage points |
| Even (K = 50%) | -8.5% | 52% | 56% | 48% | 8 percentage points |
| Moderately Republican (K = 30%) | -6.1% | 55% | 61% | 53% | 8 percentage points |
| Highly Republican (K = 10%) | -10.0% | 56% | 84% [ (!) Yes, 84% ] | 53% | 31 percentage points (!) |

The differential response in the moderately Democratic, even, and moderately Republican precincts is 7 or 8 percentage points more for Kerry voters than Bush voters, which prima facie does not seem very large at all, and thus for these precincts the hypothesis of differential response seems like a pretty plausible one to account for the entirety of the exit poll discrepancies. (Honestly, though, what actual data does anyone really have to assess whether a differential response rate is in fact unreasonably large or believably small? Again, this data can’t prove the differential response hypothesis beyond reasonable doubt. It’s just that the necessary differential response rates to fully account for the exit poll discrepancies in light of this within-precinct-error data aren’t so large in the vast majority of precincts, namely the moderately Democratic, even, and moderately Republican ones, that more reasonable doubts are added.) On the other hand, the differential response in highly Republican precincts does seem, prima facie, to be implausibly huge: 31 percentage points if we assume K = 10% or 16 percentage points if we make the most conservative possible assumption that K = 20% (the very edge of the Edison/Mitofsky definition of "highly Republican"). Thus, for these precincts, the hypothesis of differential response seems, prima facie, pretty dubious as a complete explanation of the exit poll discrepancies.
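For anyone who wants to rerun the algebra in this comment, here is a minimal Python sketch. The symbols K, W, c, k and b are the ones defined above; the function name `response_rates` and the `ROWS` list are just illustrative, and the inputs are the midpoint K values and the W and c figures quoted in the comment.

```python
def response_rates(K, W, c):
    """Solve  c = b(1-K) + kK  and  W = [b(1-K) - kK]/c - (1 - 2K)
    for the Kerry and Bush completion rates (k, b).

    K: Kerry's share of the vote (third-party vote assumed negligible)
    W: mean within precinct error, as a fraction (e.g. -0.085)
    c: mean completion rate for all voters
    """
    b = c + c * W / (2 * (1 - K))
    k = c - c * W / (2 * K)
    return k, b


# (label, K, W, c) as quoted in the comment above.
ROWS = [
    ("Highly Democratic", 0.90, 0.003, 0.53),
    ("Moderately Democratic", 0.70, -0.059, 0.55),
    ("Even", 0.50, -0.085, 0.52),
    ("Moderately Republican", 0.30, -0.061, 0.55),
    ("Highly Republican", 0.10, -0.100, 0.56),
]

for label, K, W, c in ROWS:
    k, b = response_rates(K, W, c)
    print(f"{label:22s} k = {k:.0%}  b = {b:.0%}  k - b = {k - b:+.1%}")
```

Because k divides by 2K, the estimated Kerry rate swings sharply as K gets small, which is why the Highly Republican row is so sensitive to the choice of midpoint.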

Mark – another thing when considering the “Reluctant Bush Responder” issue is that even though we see the average WPE of -6.5, we have to remember that in fully one quarter of precincts, there was WPE>5 for Bush. (p34)

surprisingly, only 25% of precincts were within plus or minus 5 percentage points.

in fact, if you look at the table on p42, an astonishing 28% of precincts actually had WPE 15″ is higher than “WPE > -15” – and the same pattern exists throughout the table.

that all seems to refute the Reluctant Repub hypothesis.

if anyone has any insight to explain how 28% of precincts actually had WPE <-15, id love to hear.

luke,

you do realize each precinct is a very small sample..and therefore would have a large random sampling error associated with it..thats why only 25% of precincts are within 5%

Another result undermining the Reluctant Bushie theory is that precincts in small town and rural areas (<50K population) showed smaller WPEs. These areas are generally heavily Republican, and moreover are the focus of the post-election parable that Bush was put over the top by an upswelling of Christians in rural/exurban areas.

Rural areas (296 precincts) had a WPE of -3.6, compared to -7.9 for cities >500K, -8.5 for cities between 50K and 100K.

Small towns (10K to 50K) similarly had a lower WPE, -4.9 (126 precincts) than the larger towns and cities.

So again, why would these heavy Republican areas be the more accurate, if Republicans per se refused at higher rates?

brian – thanks for the reminder. do we know how many interviews there were per precinct? we’d still hope to see something approximating a normal distribution tho.

ftr – ive posted a chart at

http://wotisitgood4.blogspot.com/2005/01/exit-poll-reluctant-bush-responder.html which maps the refusal rate vs state (with the states sorted by ‘most blue’ to ‘most red’). the graph shows refusal rates trending down as you move ‘redder’ – thus it seems to further undermine the ‘reluctant bush responder’ theory (altho its possible to contrive other explanations)

apologies for the frequent posting…

just a general comment (with 2 parts):

a) even if we take the reports’ main conclusions as given – that there is a problem with the weather, or distance from the polling site, or interviewer education, or interviewer age, or the interviewing rate et al – it seems that we are inevitably destined for reductio ad absurdum – and the next time the exitpolls are ‘wrong’, the purported solution will appear as a perfect national blend of gender/age/race/education – and when that doesnt work, we’ll hold out for a solution where we try to map those same elements by state, and then by precinct. and when that doesnt work, we’ll look at the demographic spread of the recruiters in an attempt to stamp out bias at that level. and so on. and if that doesnt work, we’ll find some other seemingly random, contrived statistic that fits the purported narrative such as ‘when people pay attention to elections, the WPE increases by order of magnitude – and we have a single data point to prove it’. do others get the same sense? it all just seems kinda futile. which brings me to my next point…

b) (trying not to sound flippant) if we consider exitpolls generally, in the ukraine and elsewhere, they tend to be used as indicators of fraud or otherwise (and i appreciate that the nov2 exitpolls were specifically designed for purposes other than to identify fraud) – but arent they also subject to the same considerations – distrust of the media, age/edu/gender/source of interviewers, weather, distance from polls etc?

Freeman responds to critics:

http://nashuaadvocate.blogspot.com/2005/01/news-statisticians-under-fire-for.html

and

http://alternet.org/story/21036/

Parsing the Edison/Mitofsky Report

Edison/Mitofsky has made public its long awaited AAR on the exit poll fiasco. The definitive responses so far have come from the two most respected voices on this subject: the meticulous Marc Blumenthal, and the indefatigable Gerry Daly. I’ll take…

Mark,

I don’t know if you have mentioned this in previous posts but I think another data point is being ignored in all this. Especially in key states like Florida and Ohio, there were reports of voters in Democratic precincts having to wait much longer than those in Republican precincts. I particularly remember reading about ultra-long waits in Ohio for Democratic voters. Many voters simply lost patience and left. When people are fed up with waiting or are exhausted after having waited for hours to vote, it is hard for me to imagine that they would be willing to spend more time talking to exit pollsters after they voted. That is yet another reason why I find this theory of the “Reluctant Bush Responder” less than sound.

A quick note to Jeff Hartley about the Democratic Underground thread he linked to: I hadn’t seen the thread before, but as a New Hampshire voter on November 2nd, 2004 (I voted at the Ledge Street Elementary School here in Nashua) I can say this — there is no precinct in New Hampshire, let alone in Derry, in which “2500” or even “2000” people passed through during the course of a single hour. Keep in mind the whole state had 671,000 voters on Election Day. By this New Hampshirite’s calculation, what, maybe 25,000 voters (4% of the entire state) were assigned to and voted in that particular precinct? Impossible. I live in the second-largest city in the state, I voted downtown, and despite voting at around noon I believe I was something like the 520th voter to cast a ballot (according to the lone optical-scan machine being used in the precinct). There was *literally* no wait to vote, and despite it being around lunchtime (maybe just a tad earlier) there were only 20-25 voters in the entire area (both inside the building and coming in from outside) during the ten minutes or more I was there. So the notion that in *Derry*, a suburb of Manchester, there were 2000+ people actually voting during the course of a single hour in a *single precinct*…no way. Even in Ohio, inner-city precincts were limited to around 1,500 *total registered voters* in each precinct.

So, I’m sorry, but that observation is bunk. I’d be stunned if *100* people voted in that Derry precinct over the course of an hour.

The News Editor

The Nashua Advocate

Bill: The remarkable 31% differential becomes a less remarkable 14-ish if we use the median WPE for that bracket (rather than the mean) and guesstimate a representative K within that bracket in the .87 ballpark (reasonable or even generous since the bracket is a tail of a distribution, and using .90 would treat it as uniformly distributed from .80 to 1.00).

Also note that algebra on the aggregates may be a poor guide to algebra on the instances, especially as (k – b) goes hyperbolic in K.

The real surprise is that all guesstimated cases in this bracket require extraordinary response rates by Kerry voters, which is not at all predicted by the “Bush voters hate network-logo’d kids with clipboards” version of the differential response hypothesis.

Exit Poll Study

Mystery Pollster reports on the Edison/Mitofsky study on the highly unreliable exit polls of the 2004 elections. The main problem was in selecting which districts to poll but in the polls at each district not representing the votes at that…

“The table certainly challenges the idea that Kerry voters participated at a higher rate than Bush voters, but I am not sure it refutes it.”

There are various levels of proof and refutation. It seems that you are using a strict standard of what ‘refute’ means, perhaps it is a mathematical standard that you are presuming. Why? The Mitofsky report itself does not prove mathematically that its assertion of more Republicans refusing the poll created a great error in the polls. The Mitofsky report barely shows any evidence at all for this unmathematical claim. As far as proofs and refutations, your analysis, therefore, is on equal footing with the Mitofsky report.

Furthermore, certain items seem to cast a cloud of suspicion on the Mitofsky report. First, it claims to prove its conclusions, while its conclusions are very unproven by any professional standard. Second, the report came out the day before the Inauguration, as if to confirm its legitimacy. Certainly, it will be torn up, but it could not have been within a 24-hour period. Third, the news media jumped on the bandwagon, with all major networks reporting the report’s conclusion without any kind of analysis of the legitimacy of the conclusion. In other words, it would seem that perhaps US citizens are once again duped.

“Here’s why:

The table shows response rates for precincts not voters. Unfortunately, we have no way to tabulate response rates for individuals because we have no data for those who refused or were missed.”

There are other ways to estimate/get these numbers. Try this experiment. Take the ‘real’ vote counts to derive ‘real’ percentages per state of Kerry and Bush voters. Next, “filter out” the poll percents for each. What you have remaining should on average be the percent of Kerry voters and Bush voters in the Refusal Rates per state. Finally, see if these numbers make sense. There are several ways to do this. (a) Look at a distribution with mean and deviation. Is it normal or does it make sense? Are there outliers? (b) Try to fit it to a linear function of Rep rate to refusal rate. What’s the r-value? What kind of confidence is there? Are there outliers? Does this function provide a better explanation than the hypothesis that the exit poll data is correct? Is that true for all states or just some?

A few quick thoughts…

Luke asks why the “Mod Rep” precincts are so much more numerous (n=415) than the “Mod Dem” precincts (n=165). I suspect it has something to do with why so many more counties on this map (http://www.usatoday.com/news/politicselections/vote2004/countymap.htm) are red. I suspect, though I don’t know for sure, that urban Democratic precincts tend to be a bit larger, and thus less numerous, than more rural Republican precincts. Remember that the exit poll sample selection methodology takes precinct size into account.

Luke is right to warn readers to note the occasionally small subgroup sizes in the Edison/Mitofsky report. The average number of interviews per precinct was roughly 50, given the number of interviews and sampled precincts reported.

Consider a subgroup like “High Republican,” which has been the focus of this post and the comments in this thread. It had only 40 precincts and thus represents roughly 2000 interviews. The sampling error reported by NEP (http://www.exit-poll.net/election-night/MethodsStatementStateGeneric.pdf) for a sample that size was +/- 4%, but remember that “within precinct error” (WPE) uses the margin between the candidates which combines two estimates (the Kerry and Bush vote), so the effective 95% confidence interval is between +/- 7% and 8% on the WPE (depending on your assumptions about the independence of the Bush and Kerry percentages).

Thus, while I have no quarrel with Bill Kaminsky’s algebra (above), his conclusion that “the differential response in highly Republican precincts does seem, prima facie, to be implausibly huge,” ignores the possibility that sampling error contributed to the WPE for that subgroup. The “High Rep” precincts are only 9% of all precincts where George Bush won at least 60% of the vote (40 of 455 – p. 36). Throw in the potential for fuzziness in the non-response tallies kept by interviewers, and I remain skeptical that the data in this table “refutes” differential participation in the survey as a source of error.
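The comment does not spell out how the interval on the margin was computed, so the following is only one way to see why the independence assumption matters. It assumes both candidates' shares carry the same +/- 4 point margin of error and have a correlation `rho`; the function name `margin_moe` and the `rho` parameter are illustrative, not anything from the NEP methods statement.

```python
import math


def margin_moe(share_moe, rho):
    """Margin of error on the difference between two vote shares.

    Assumes each share has the same margin of error (share_moe) and
    that the two shares have correlation rho. With equal variances,
    Var(B - K) = Var(B) + Var(K) - 2*Cov(B, K) = Var * (2 - 2*rho),
    so the margin of error scales by sqrt(2 - 2*rho).
    """
    return share_moe * math.sqrt(2 - 2 * rho)


# rho = -1: a pure two-candidate race, where one share is 100% minus
# the other, so the error on the margin is double the error on a share.
for rho in (-1.0, -0.75, -0.5):
    print(f"rho = {rho:+.2f}: +/- {margin_moe(4.0, rho):.1f} points")
```

With a perfect negative correlation the margin's error is double the share's error, 8 points here. As the correlation weakens (for example, with some third-party vote) the figure shrinks, and a value near 7 corresponds to a strong but imperfect negative correlation under these assumptions.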

I am hoping to post more on the main page today, but so far my day job is not cooperating…

It’s becoming more and more apparent that John Kerry was the actual winner of the election, but had it stolen by the Republicans, either through massive vote fraud or intimidation. What a sad day for our former democracy.

Supposing there is a response differential, and supposing it’s a compound artifact of voter affinity for pollsters and pollster affinity for voters … then it’s a damnably multivariate problem, and these quintile summaries don’t give us much to go on.

I am surprised by the lack of apparent correlation of refusal rate to Bush vote by precinct, but the extreme precincts are both sparse and nearly irrelevant to the aggregate error. [By way of boundary landmark references, note that a 100% Bush precinct — like a 100% Kerry precinct — would have no effect whatsoever on forecast error, no matter what the refusal rate.]

We need data points, not summaries, and even then it’s a highly occluded portrait.

What I want to see is a precinct by precinct list with the exit poll results (including age, sex, and race), voting results, voting results of precincts housed in the same building, pollster information (including source of recruitment), and method of voting (paper, punch, touch-screen). I believe that Conyers will get such data sooner or later, and I don’t want him interpreting it for America. It needs to be available to everyone – or, at the least, to experts across the political spectrum. That said, in the marketing world, there have been times that I had all of the data that I could dream of and still was not able to determine “what really happened.”

Are you people forgetting that the raw vote data per precinct has been available since November?

People at Berkeley did an analysis of the 2004 Florida data. Their conclusion was that there was a statistically significant relationship between some type of e-voting and Bush “over-votes.”

See the paper. This is real mathematics, by the way, not the bubble-gum analysis or summaries that we have seen so far:

http://verifiedvoting.org/downloads/election04_WP.pdf

Do you agree/disagree with the Berkeley numbers? Have you tried to reproduce them yourself?

You mathematical wizards should also keep in mind that many Democratic voters (being less wealthy, more working class, many with two or more jobs) were much more likely to be in a hurry than Republican voters, who tend to be much better off financially, and are more likely to be in management positions at work. Hold down two or three jobs and take care of kids, see how much you rush around.

I don’t know how it impacts the calculations, but I know that many conservatives feel a patriotic duty to lie to pollsters (I recall Chicago columnist Mike Royko pushing that idea in the 70s or 80s and I know it caught on with many conservatives). The abusive behavior of many liberals in urban areas leaves many city conservatives in the closet and hiding what they really do in the voting booth.

“The abusive behavior of many liberals in urban areas leaves many city conservatives in the closet and hiding what they really do in the voting booth.”

To tell you the truth folks, I’m an in-the-closet conservative at school. VERY few classmates, and only one professor, know that I am a Republican. They know I have “conservative tendencies”, but I am pretty sure my 4.0 wouldn’t hold if I let every professor know I voted for George Bush and support most conservative causes. Academia can be HIGHLY intolerant of contrarian political points of view. Go figure…

It’s funny, I have to be very careful with my friends. It’s only after a few hints are dropped here and there that I will “come out” to a classmate that I suspect is a conservative. My “rep-adar” is pretty good and I haven’t outed myself to a liberal yet.

Very odd, but that’s my reality if I want to continue pursuing higher education.

My point – I don’t think liberals realize just how imposing and intolerant they can be when expressing their point of view (e.g., just read a DU post). I think the latest neo-conservative movement is a direct backlash to the media’s continued and sustained viscerally biased reporting.

Can we move past this in America? Well… The blogosphere is helping to bridge this gap. People of all political backgrounds, faiths, and ideologies now have a venue to vent. Let our ideas live or die based on their merits – and merits alone – in this market place of ideas.

aaa, I just read your Freeman response links.

I wonder if Freeman has ever heard of the logical fallacies: Appeal to Authority and Appeal to the Gallery. He did have some valid points in his criticism, but it’s curious how Freeman spent so much time building up his credentials.

Freeman admitted to me in a phone conversation quite a while back that he was NOT a quantitative researcher. He said that all he knows about stats is what he learned from a couple courses while working on his PhD.

Also, his references to the literature are pretty comical given that, from the ones I located, they are not based on empirical research, but on observation and opinion.

ALL empirical research on exit polls that I have found (please show me otherwise) indicates persistent Democratic bias since the exit polls’ invention.

Perhaps someone should ask him why he insists on a single-tail test when his assumptions require a two-tail test?
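For readers unsure why the one-tail versus two-tail choice matters, here is a small sketch under the assumption of a standard normal test statistic. The function names and the z value of 1.8 are illustrative and do not come from Freeman's paper.

```python
import math


def one_tailed_p(z):
    """P(Z >= z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def two_tailed_p(z):
    """P(|Z| >= |z|) for a standard normal Z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# Illustrative z statistic: below 0.05 one-tailed, above 0.05 two-tailed.
z = 1.8
print(f"one-tailed p = {one_tailed_p(z):.4f}")
print(f"two-tailed p = {two_tailed_p(z):.4f}")
```

For a positive z the two-tailed p-value is exactly double the one-tailed value, so a one-tailed test makes the same discrepancy look twice as unlikely.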

Dr. Freeman is a curious personality. He’s also irrelevant.

Well, after hearing all these poor conservatives complain about how they are an abused minority I suppose I should throw in my own anecdote:

I am a mid-level manager in a large company. The 3 layers of mgmt above me are publicly-declared Republicans. Two of them are, by any measure, extremists. I don’t dare breathe a word of politics for fear of retribution (I’ve seen it happen). They assume I’m Republican because they assume all Democrats are like those portrayed in Mallard Fillmore.

Y’know guys, it goes both ways. And the reality is that although people on both sides will talk about not talking to pollsters or about lying to the pollsters, once they are approached their instincts take over. Unless they are shy or under time pressure, they likely will agree to fill in the form and will do so correctly. This is because most of us share a common human failing … we love to give our opinion.

Blame the college students

Mystery Pollster analyzes the official report of the Election Night exit-poll debacle; Mickey Kaus summarizes…

Besides, the exit polls are anonymous; it’s not like people can be outed via an exit poll. It’s a secret ballot.

Brian Dudley, there is interesting research on non-response. I’ve read that most non-respondents, if given the chance to do over again, would choose differently. The visceral reaction is hard to overcome in the moment. People may not know immediately that exit polls are anonymous. All they see is someone, presumably with a clipboard, and presumably with the markings of an organization (MSM), and they decide to just shake their head and walk on by. I did this all the time to people from CALPIRG, Sierra Club, ANSWER, etc. who stalked UCSD students as they walked between classes. I just didn’t want to be bothered and I certainly didn’t want to be bothered by what I consider to be “extremist” organizations.

Split-second decisions not to participate in any survey could be motivated by a number of things that are very hard to measure.

Until this current commotion, I never knew that exit ballots were secret. However, if I am with my “Kerry” spouse and “secretly” voted for Bush, I can’t believe that spouse won’t sit with me and see how I complete my exit ballot. Both my spouse and I were surprised that my brother-in-law and one of his best friends had decided long ago to lie – they don’t feel that any results should be valid except the “real” votes. Having a marketing background, understanding samples, and understanding all sorts of things that can happen as exit polls are leaked (reduced or increased turnout for either candidate), I would never lie to an exit pollster – but the fact that we are all reading this already says we are not typical.

Potentially relevant: there is a Pew research study a few years back comparing “immediate participants” to “reluctant participants” (I may have the exact terms wrong, as I’m writing this from memory).

That is, some telephone polls are “persistent” in that they call the same number several times over a multi-day period in hopes of getting a response. People who refuse to participate the first time they answer, but agree to participate in a follow-on call are “reluctant participants”.

This was relevant to the study because Pew found that overnight polls, which by definition only include “immediate participants”, were getting significantly different results than multi-day polls in certain electoral races. Specifically, this happened in races between a white Republican and a black Democrat.

The study was a detailed one. They asked the people their views on a wide range of topics. For a few topic areas they used differently phrased questions for different subsamples to see how phrasing could change the response (the resulting differences were sometimes dramatic).

But the biggest finding by far was that “reluctant participants” as a group held very negative views of African-Americans. This stood out in every question involving race.

And, yes, those people were heavily Republican.

“ALL empirical research on exit polls that I have found (please show me otherwise) indicates persistent Democratic bias since the exit polls’ invention.”

Even if there has always been a historical liberal bias in exit polls, the 2004 exit poll is still unusual when compared to previous exit polls, no? If anything, the 2004 poll numbers would reflect some unseen problem in addition to a historical bias, right?

Fran, “Having a marketing background, understanding samples, and understanding all sorts of things that can happen as exit polls are leaked (reduced or increased turnout for either candidate), I would never lie to an exit pollster”

Agreed. Having worked on a political campaign that relies on accurate polls (talking pre-election polls), I am disturbed to hear about purposeful deception in responding to polls. When my father-in-law told me that he had lied to Gallup, I was baffled (and looked at the Colonel a bit cross-eyed).

Observer, very interesting. Do you have a link? Date? Title? Author? I’d love to read up on that Pew study.

Adan, you are correct. This year the exit poll was unusually biased. And from my point of view, there is definitely an “unseen” problem with this year’s exits when compared to previous years. Some say this is evidence of fraud, others say it’s training of pollsters and differential non-response.

I don’t really know, but the Mitofsky report, while it doesn’t answer all my questions (still haven’t had time to read it closely yet), is certainly better than Freeman or Simon/Baiman’s work. Light years better…

Here’s a test for folks like Simon/Baiman and Freeman: The data is supposed to be made available to the Roper Center shortly. Will Freeman and Simon/Baiman rework their analysis using these new data? Hopefully.

Will “real” statisticians produce higher quality and more relevant analysis of the data? Count on it.

The Pew study that “observer” refers to was conducted in 1997. The full report is at this link:

http://people-press.org/reports/print.php3?ReportID=94

It was updated last year:

http://people-press.org/reports/pdf/211.pdf

One comment: While the 1997 study did show the differences in racial attitudes that Observer describes, the report does not suggest a Republican skew:

“There were no significant differences in the way respondents in the standard and rigorous samples described themselves politically, in their views about human nature, or in how well-informed they were about current events. The distribution of Democrats, Republicans and Independents was nearly identical in both samples. Both samples also included similar proportions of self-described liberals and conservatives.”

Thanks Mark – always on the ball!

As I understand it, your hypothesis is that Bush voters were shyer in Kerry states than in Bush states, while Kerry voters might be shyer in Bush states than in Kerry states, and that this would therefore tend to cancel out completion rate effects. However, I did what I think may be a test of this hypothesis, by testing for an interaction between the actual margin between the two candidates and completion rates in predicting the exit poll error. The reasoning is that in pro-Kerry states, more of the variance in completion rates would be accounted for by shy Bush voters than in pro-Bush states, where more of the variance in completion rates would be accounted for by shy Kerry voters. So the slope of the regression line between completion rate and exit poll error should be a function of Bush’s margin, i.e. there should be a significant interaction between margin and completion rate.

I omitted DC from the model as it was a clear outlier. The interaction term was not significant. RI was exerting more leverage than it should, so I re-ran the model with RI omitted. Again the interaction term was not significant, although it was trending toward significance (p=.08) and the sign of the regression coefficient was right for your hypothesis. So it could well be that a “variable shy voter” factor does contribute to some variance in the error rate, but the effect size would seem to be too small to account for much variance in exit-poll error.
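The interaction model described above can be sketched roughly as follows. This is only an assumed reading of the setup (error regressed on completion rate, margin, and their product), fitted by ordinary least squares in plain Python; the helper names and the synthetic numbers are hypothetical, and the significance test itself is left out.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so each row operation updates both sides at once.
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def fit_interaction_model(completion, margin, error):
    """OLS fit of error = b0 + b1*completion + b2*margin + b3*completion*margin."""
    rows = [[1.0, c, m, c * m] for c, m in zip(completion, margin)]
    p = 4
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, error)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical, noise-free state-level data built from known coefficients.
completion = [0.30, 0.40, 0.50, 0.60, 0.70, 0.35, 0.55, 0.65]
margin = [-0.40, -0.20, 0.00, 0.20, 0.40, 0.30, -0.30, 0.10]
error = [0.02 - 0.03 * c + 0.01 * m + 0.5 * c * m
         for c, m in zip(completion, margin)]

b0, b1, b2, b3 = fit_interaction_model(completion, margin, error)
print(f"interaction coefficient b3 = {b3:.4f}")
```

The coefficient `b3` is the interaction term: it measures how the slope of error on completion rate changes with the margin. With real data one would also need its standard error to judge significance, which a statistics package would report.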

Febble, this would mean much more at the precinct level – many states have very red and very blue precincts. I am hoping that we all have access to the precinct level data when it is deposited at Roper.

Fran: agree, and there will be more power at precinct level. Even if it is a small effect it may be a proxy for the same factor expressed as “lying”. In other words the proportion of non-responders from one camp or the other may correlate with the proportion who respond but give the wrong answer. So it would be interesting (I think) to run this model on precinct level data.

Febble, the “Professor M” anecdote raises the unfortunate prospect that the protocol was not sufficiently drummed into exit poll workers, and consequently our completion rate data (and more importantly for this analysis, refusal rate data) is of such poor quality it may not be useful in resolving the response bias question.

Readers of this blog may want to read some commentary on LeftRight about the same topic.

One point:

Is it not possible to explain the overall response rate data without assuming that the differential response rate is different across the country?

I.e., assume:

1. Bush/Red states have many more small towns/rural areas. In these areas, as opposed to New York or Los Angeles, the overall precinct level participation rate of **both** Bush and Kerry respondents is higher.

2. The differential between Bush/Kerry responses may be the same across the country – ie 6% everywhere – and still be consistent with this data, no?

This seems obvious to me. Has this been pointed out before?

3. Now, where does the 6% error come from? Probably some combination of reticence and/or misrepresentation due to embarrassment for voting for Bush, as the highly extreme and vocal anti-Bush movement has made it difficult to be vocally pro-Bush.
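The argument in points 1 and 2 can be checked with a little arithmetic. The sketch below is only an illustration: it reads "6%" as a 6-point gap between Kerry and Bush voters' response rates, ignores the distinction between refusals and misses, and uses made-up base rates for an urban Kerry precinct and a rural Bush precinct.

```python
def completion_rate(kerry_share, base_rate, differential=0.06):
    """Overall completion rate when Kerry voters respond at
    base_rate + differential/2 and Bush voters at base_rate - differential/2."""
    kerry_rate = base_rate + differential / 2.0
    bush_rate = base_rate - differential / 2.0
    return kerry_share * kerry_rate + (1.0 - kerry_share) * bush_rate


# Hypothetical precincts: same 6-point differential, different base rates.
urban_kerry_precinct = completion_rate(kerry_share=0.8, base_rate=0.50)
rural_bush_precinct = completion_rate(kerry_share=0.2, base_rate=0.56)

print(f"urban, 80% Kerry: {urban_kerry_precinct:.3f}")
print(f"rural, 80% Bush:  {rural_bush_precinct:.3f}")
```

Under these assumed numbers the Bush precinct shows the higher completion rate even though Kerry voters respond more often everywhere, so a flat or reversed completion-rate table does not by itself rule out a constant differential.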

Explaining the Exit Polls

Left2Right does a lengthy job doing what most media is trying to do, understand what went wrong with the exit polls.

Rick Brady says, “the Mitofsky report, while it doesn’t answer all my questions (still haven’t had time to read it closely yet), is certainly better than Freeman or Simon/Baiman’s work. Light years better…”

No, it is not. I have read it completely and I am a mathematician. I’ve said it before and I’ll say it again, the Mitofsky report gives absolutely no proof of its baseless assertion.

The report was timed to be placed into mainstream media right before Bush’s Inaugural. No mathematicians such as myself had time to rigorously refute it within a day and contact major news media to refute it. However, statisticians have now had time to gather up and offer their criticism:

http://uscountvotes.org/ucvAnalysis/US/USCountVotes_Re_Mitofsky-Edison.pdf

Rick Brady, after you finally read the Mitofsky report maybe you could comment on why it is valid. Maybe you could stop attacking liberals on this web page. I guess the best defense is a good offense, right? Can you just stop the slander and stick to facts?

Don, thanks for the rebuke. I do need it from time to time. I have read the Mitofsky report since my last comment and indeed I find that it is not entirely explanatory. However, I cannot disprove their report from the data that I’ve seen. I can say that some of their assertions are not completely supported by the report.

I can though demonstrate the numerous flaws in the logic and statistics behind Freeman’s paper and I have undermined the Simon/Baiman paper on the same grounds. No one has yet challenged my critique of the Simon/Baiman paper, although it has yet to be widely circulated for review.

I have a post forthcoming that details the numerous problems with the work done by Freeman to date. If time permits, I should have the exhaustive Freeman critique done by week’s end. Since you are a mathematician, I’m sure you will appreciate it (although, what I present is largely based on logic with a little statistics, so non mathematicians should appreciate it as well).

Hope that my work will get some wider attention, but that is up to those with more power in this ‘sphere than myself. Nevertheless, if you send me an e-mail, I’ll send you a link to my work when it’s complete (or you can review my critique of the Simon/Baiman paper now if you’d like).

Don, one more thing. After reading the EMR report more thoroughly, I still stand by my statement “..the Mitofsky report, while it doesn’t answer all my questions (still haven’t had time to read it closely yet), is certainly better than Freeman or Simon/Baiman’s work. Light years better…”

Have you read the Freeman and Simon/Baiman reports? How does Freeman justify use of a single-tail test when his null hypothesis requires a two-tail test? How does Freeman divine a digit significant to a tenth from an extrapolation of a whole digit? Why does Freeman not look at the magnitude of errors in Northeastern states like New York, Vermont, Rhode Island, and New Hampshire where the error was much larger than in his battleground states? These points are empirically demonstrable.

His quantitative errors are understandable (he told me on the phone that he is a qualitative researcher and that the only stats he knows is from a couple of courses he took while working on his PhD), but his suppression of evidence and contradictory presentation of the literature is simply astounding for an MIT PhD.

Why does Freeman exclude analysis of the 1992 exit polls (Mitofsky and Edelman, 1995), which showed Democratic bias in 1992 and “the last two elections”? Why does Freeman use the BYU exit poll of Utah to demonstrate how accurate exit polls are in general when the NEP poll of Utah was off by a couple of percentage points? Why also does he withhold information about the German polls cited in his paper, information that Mark Blumenthal tracked down? Mark had the information well before Freeman finalized his paper (and I know he reads this blog). That is either suppression of evidence or sloppy research – or worse – both.

And funny thing about the Ukrainian exit polls: A simple analysis of confidence intervals and sample size reveals that the Ukrainian exits had a larger design effect than the US exit polls.

These are the principal points of my paper. When you take it all together, Freeman’s paper is terrible. Doesn’t even come close to the level of detail presented in the EMR paper; although, this does not mean that the EMR paper has proven their case – that is something entirely different.

Rick, previously, I asked you “..after you finally read the Mitofsky report maybe you could comment on why it is valid…”

You have answered me with the following:

(1) Offensive strategy. You supply detailed criticisms of Freeman and also Simon/Baiman.

(2) Defensive strategy (generalizations and evasions):

(a) “…it is not entirely explanatory. However, I cannot disprove their report from the data that I’ve seen. I can say that some of their assertions are not completely supported by the report.”

(b) “…this does not mean that the EMR paper has proven their case – that is something entirely different.”

Edison/Mitofsky not only gave no 2004 data which supported their assertions, but the 2004 summary data and other 2004 charts given suggest the opposite of their conclusion.

Let us assume that all your claims about Freeman and also Simon/Baiman are true. Then, even in that case, it is not fair to say that the EMR is “light years better” than some other bad reports.

Don, I know the Freeman and Simon/Baiman papers are bad. I don’t know that the Mitofsky paper is bad, just that it’s not falsifiable (i.e., I can’t reproduce their analysis). There is a huge difference.

I tend to trust decades of experience, but accept that others may not. I don’t believe that EMR are BSing, I just think they are a bit sloppy and self-righteous in their presentation. They could do us all a favor by making it possible to reproduce their analysis. That’s too bad on their part (actually, I’m not entirely convinced yet that their work cannot be reproduced, but have not had time to REALLY look at the UMich data).

So, you’ve got me to concede. It’s not light years better. But, on a scale of 0-10, the Freeman and Simon/Baiman papers are clearly “0.” The EMR paper is about a 5 because they provide a tremendous amount of new data and insight into methods and data collection procedures. I don’t think that they are involved in some grand conspiracy here. I have to believe that they have empirically substantiated their claims – only have not bothered to make that detailed analysis public. I’ve asked them to do so and I’m sure others have as well.

Do you think EMR are partners in a Bush conspiracy? Halliburton payoff somewhere? What are you implying?

Rick Brady: “I tend to trust decades of experience, but accept that others may not.”

I agree, because I also have that tendency to trust professionals. However, in this case, the analysis is so bad as to negate the validity of its conclusion. That does not mean that one should believe the opposite conclusion, but instead be open-minded. That is what statisticians are doing. USCountVotes.org, for example, states that election fraud should be one of a couple of viable hypotheses.

In addition to the summary table posted by Blumenthal that has the appearance of refuting the EMR conclusion, there is also the issue of the WPE chart in their paper. That chart shows that hi-tech voting had a hugely significant difference in WPE from the WPE of normal voting. The EMR conclusion lacks any kind of explanatory power for this fact. Moreover, the EMR asserts (not proves) that there was no such difference between hi-tech voting and normal voting. Because the validity of this conclusion is also negated by the very data in the paper, I choose to be open-minded about the possibility that some hi-tech voting could have been fraudulent.

Rick Brady: “They could do us all a favor by making it possible to reproduce their analysis.”

I agree.

Rick Brady: “Do you think EMR are partners in a Bush conspiracy?”

I choose not to have blind faith in the EMR paper, especially because there are problems with it. I believe in the power of science and mathematics. As other scientifically-minded individuals believe, I think that fraud is a viable hypothesis still.

As far as a “Bush conspiracy,” the burden of proof is not on me to show anything. EMR is the one making a gazillion dollars from their assertions. They should at least provide some evidence for them. As you have asked for, they should have posted their raw exit poll data. In fact, it seems that they would most likely have it in some standard postable format already, if they can do global computations on the data to get their conclusions and summary charts. So where is it?

Don, I agree that EMR should do more, but why fall on the sword when the data may actually show something more devious?

You wrote: “That chart shows that hi-tech voting had a hugely significant difference in WPE from the WPE of normal voting.” Are you sure? Sure, the paper ballot was the lowest overall, but what about mechanical voting? I believe it was the highest. I don’t have the report in front of me, so set me straight: isn’t mechanical voting the old-fashioned pull levers? Also, how do you explain the fact that the discrepancy by vote method virtually evaporates when the data are isolated by urban and rural? (A near-perfect example of Simpson’s Paradox.) I don’t know – am I reading that right?
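Here is a toy illustration of how a gap by voting method can vanish once precincts are split into urban and rural. Every number below is invented for the example and none comes from the Edison/Mitofsky report; strictly speaking it shows the confounding that underlies Simpson's Paradox rather than a full reversal.

```python
# (method, setting, number of precincts, mean error) -- all values hypothetical
precinct_groups = [
    ("paper", "urban", 10, -8.0),
    ("paper", "rural", 90, -4.0),
    ("touch-screen", "urban", 90, -8.0),
    ("touch-screen", "rural", 10, -4.0),
]


def weighted_mean_error(groups, method, setting=None):
    """Precinct-weighted mean error for a method, optionally within one setting."""
    selected = [g for g in groups
                if g[0] == method and (setting is None or g[1] == setting)]
    total = sum(count for _, _, count, _ in selected)
    return sum(count * err for _, _, count, err in selected) / total


for method in ("paper", "touch-screen"):
    overall = weighted_mean_error(precinct_groups, method)
    urban = weighted_mean_error(precinct_groups, method, "urban")
    rural = weighted_mean_error(precinct_groups, method, "rural")
    print(f"{method}: overall {overall:.1f}, urban {urban:.1f}, rural {rural:.1f}")
```

In this made-up data the two methods have identical errors within each setting, yet the overall averages differ because touch-screen precincts are mostly urban and paper precincts mostly rural.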

Has anyone addressed the apparent finding by William Kaminsky (as reported by Simon and Baiman, p. 11) that “in 22 of 23 states which break down their voter registrations by party ID the ratio of registered Republicans to registered Democrats in the final, adjusted exit poll was larger than the ratio of registered Republicans to registered Democrats on the official registration rolls”? Isn’t this inconsistent with the “Reluctant Bush Responder” hypothesis?

