Last week, this site quietly passed the milestone of one million page views, as tracked by Sitemeter. Now, as MP will be the first to note, the “page views” statistic may tell us more about the mechanical function of a site than about the number of individuals who used it. Also, the most popular blogs hit that milestone every week; some can do it in a single day. Nonetheless, a million page views still seems noteworthy for a special-interest blog with an admittedly narrow focus.

All of this reminds me of the uncertainty I felt about a year ago in the aftermath of the 2004 election, wondering whether I could find enough worthy topics to sustain a blog focused on political polling. Granted, this last week has not been typical given the off-year elections in a handful of states, but the sheer volume of Mystery-Pollster-worthy topics I stumbled on is remarkable.

First, there were the stories I updated at the end of last week: the surveys on the California ballot propositions, the stunning miss by the Columbus Dispatch poll, and the release of two election day surveys in New Jersey and New York City. (Incidentally, in describing their methodologies as “very similar,” I should have noted one key difference: the AP-IPSOS survey of New Jersey voters was based on a random digit dial [RDD] sample, while the Pace University survey of NYC voters sampled from a list of registered voters.)

But then there were the stories I missed:

Detroit – Just before the mayoral runoff election in Detroit last week, four public polls had challenger Freman Hendrix running ahead of incumbent Kwame Kilpatrick by margins ranging from 7 to 21 percentage points. On Election Day, two television stations released election day telephone surveys billed as “exit polls”** that initially put Hendrix ahead by margins of 6 and 12 percentage points. One station (WDIV) declared Hendrix the winner.

However, when all the votes were counted, Kilpatrick came out ahead by a six-point margin (53% to 47%). This led to speculation about either the demise of telephone polling or the possibility of vote fraud (not an entirely unthinkable notion, given an ongoing FBI investigation into allegations of absentee voter fraud in Detroit). The one prognosticator who got it right used an old-fashioned “key precinct” analysis that looked at actual results from a sample of precincts rather than a survey of voters.
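The post does not describe that prognosticator's actual model, so what follows is only a hypothetical sketch of one simple form of key-precinct projection: take the counted results from a sample of precincts, measure how far a candidate's share has moved relative to some earlier baseline election in those same precincts, and apply that vote-weighted swing to the candidate's citywide baseline share. The uniform-swing assumption, the baseline election, and every name in the code are illustrative assumptions.

```python
from typing import NamedTuple


class Precinct(NamedTuple):
    baseline_share: float  # candidate's share (%) in an earlier baseline election (assumed available)
    current_share: float   # candidate's share (%) in the actual count on election night
    votes: int             # ballots counted on election night, used as the weight


def project_citywide_share(sample, citywide_baseline):
    """Project a candidate's citywide share from sampled key precincts.

    Hypothetical uniform-swing model: the vote-weighted average change seen in
    the sampled precincts is added to the candidate's citywide baseline share.
    """
    total_votes = sum(p.votes for p in sample)
    if total_votes == 0:
        raise ValueError("no votes counted in the sampled precincts")
    swing = sum((p.current_share - p.baseline_share) * p.votes for p in sample) / total_votes
    return citywide_baseline + swing


if __name__ == "__main__":
    # Made-up precincts for illustration only, not Detroit data.
    sample = [Precinct(50.0, 55.0, 100), Precinct(40.0, 43.0, 300)]
    print(project_citywide_share(sample, citywide_baseline=48.0))
```

Because this kind of projection works from counted ballots, it cannot be thrown off by respondents who misreport their vote; its weak points are the uniform-swing assumption and how well the sampled precincts represent the whole city.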

Measuring Poll Accuracy – Poli-Sci Prof. Charles Franklin posted thoughts and data showing a different way of measuring “accuracy” in the context of the California proposition polling. His point – one that MP does not quarrel with – is to focus not on the average error but on the “spread” of the errors. Money quote:

The bottom line of the California proposition polling is that the variability amounted to saying the polls “knew” the outcome, in a range of some 9.6% for “yes” and 8.55% for “no”. While the former easily covers the outcome, the latter only just barely covers the no vote outcome. And it raises the question of how much is it worth to have confidence in an outcome that can range over 9 to 10%?
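Franklin's distinction between the average error and the spread of the errors is easy to compute. The sketch below is a minimal illustration, assuming the “range” is simply the highest minus the lowest published estimate for one side of a measure (Franklin's own computation may differ) and using the published percentages without reallocating undecideds. The function name `error_summary` is hypothetical.

```python
from statistics import mean, pstdev


def error_summary(poll_shares, actual_share):
    """Summarize how a set of polls missed one side ("yes" or "no") of a measure.

    poll_shares: published percentages from each poll, undecideds not reallocated.
    actual_share: the certified result for the same side, in percent.
    """
    errors = [share - actual_share for share in poll_shares]
    return {
        # Average signed error: positive means the polls overstated this side.
        "mean_error": mean(errors),
        # Spread, assumed here to be the range of the published estimates.
        "range": max(poll_shares) - min(poll_shares),
        # Population standard deviation of the errors, another spread measure.
        "sd": pstdev(errors),
        # Does the range of poll estimates include the actual outcome?
        "covers_outcome": min(poll_shares) <= actual_share <= max(poll_shares),
    }


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, not the California polls.
    print(error_summary([40, 45, 50], 44))
```

A small average miss can hide a wide range: two sets of polls with the same mean error can differ greatly in how much confidence any single one of them deserves.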

Franklin also points out an important characteristic of the “Mosteller” accuracy measures I hastily cobbled together last week:

MysteryPollster calculates the errors using the “Mosteller methods” that allocate undecideds either proportionately or equally. That is standard in the polling profession, but ignores the fact that pollsters rarely adopt either of these approaches in the published results. I may post a rant against this approach some other day, but for now will only say that if pollsters won’t publish these estimates, we should just stick to what they do publish – the percentages for yes and no, without allocating undecideds.

For the record: I have no great attachment to any particular method of quantifying poll accuracy (there are many), but Franklin’s point is valid. Judging “accuracy” in polling takes more than a quick computation, and the notion is well worth more considered discussion.
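To see why the choice matters, here is a minimal sketch for a two-sided ballot measure comparing the published numbers (Franklin's preference) with proportional and equal allocation of undecideds. It assumes shares are in percent and that “undecided” is everything not yes or no; the function names are hypothetical.

```python
def allocate_undecided(yes, no, undecided, method="none"):
    """Return (yes, no) shares in percent after handling undecideds.

    method="none":         keep the published numbers.
    method="proportional": split undecideds in proportion to yes and no.
    method="equal":        split undecideds evenly between yes and no.
    """
    if method == "none":
        return yes, no
    if method == "proportional":
        decided = yes + no
        return yes + undecided * yes / decided, no + undecided * no / decided
    if method == "equal":
        return yes + undecided / 2, no + undecided / 2
    raise ValueError(f"unknown method: {method!r}")


def yes_error(yes, no, undecided, actual_yes, method="none"):
    """Signed error on the "yes" share under a given undecided allocation."""
    allocated_yes, _ = allocate_undecided(yes, no, undecided, method)
    return allocated_yes - actual_yes


if __name__ == "__main__":
    # Hypothetical poll: 30% yes, 50% no, 20% undecided; the measure got 40% yes.
    for method in ("none", "proportional", "equal"):
        print(method, yes_error(30, 50, 20, 40, method))
```

In this made-up case the same poll looks ten points off, modestly off, or dead on depending only on how the undecideds are treated.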

More on California – Democratic pollsters Mark Mellman and Doug Usher, who conducted internal surveys for the “No” campaigns against Propositions 74 and 76, summarized the lessons they learned for National Journal’s Hotline (subscription required).

It is critical to test actual ballot language, rather than general concepts or initiative titles. It is tempting to test ‘simplified’ initiative descriptions, under the assumption that voters do not read the ballot, and instead vote on pre-conceived notions of each initiative. That is a mistake. Proper initiative wording — in combination with a properly constructed sample that realistically reflects the potential electorate — are necessary conditions for understanding public opinion on ballot initiatives. Pollsters who deviated from those parameters got it wrong.

Arkansas and the Zogby “Interactive” Surveys – A survey of Arkansas voters conducted using an internet-based “panel” yielded very different results from a telephone survey conducted using conventional random sampling. Zogby had Republican Asa Hutchinson leading Democratic Attorney General Mike Beebe by ten points in the race for Governor (49% to 39%), while a University of Arkansas Poll had Beebe ahead by a nearly opposite margin (46% to 35%). The difference led to an in-depth (subscription only) look at Internet polling by the Arkansas Democrat-Gazette (also reproduced here) and a release by Zogby that provides more explanation of their methodology (hat tip: Hotline).

The “Generic” Congressional Vote – Roll Call’s Stuart Rothenberg found a “clear lesson” after looking at how the “generic” congressional vote questions did at forecasting the outcome in 1994 (here by subscription or here on Rothenberg’s site).

When it comes to the question of whether voters believe their own House Member deserves re-election, Republicans are in no better shape now than Democrats were at the same time during the 1993-1994 election cycle.

Rothenberg also looked at how different question wording in the so-called generic vote can produce different results. (Yes, Rothenberg’s column appeared three weeks ago, but MP learned of it over the weekend from this item by the New Republic’s Michael Crowley, posted on TNR’s new blog, The Plank.)

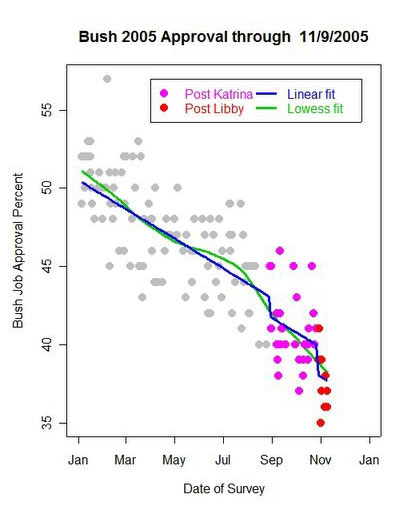

Bush’s Job Rating – Finally, all of these stories came during a ten day period that also saw new poll releases from Newsweek, Fox News, AP-IPSOS, NBC News/Wall Street Journal, the Pew Research Center, ABC News/Washington Post and Zogby. [Forgot CBS News]. Virtually all showed new lows in the job rating of President George W. Bush. As always, Franklin’s Political Arithmetik provides the killer graph:

In another post, Franklin (who was busy last week) uses Gallup data to create job approval charts for twelve different presidents that each share an identical (and therefore comparable) graphic perspective. His conclusion:

President George W. Bush’s decline more closely resembles the long-term decline of Jimmy Carter’s approval than it does the free fall of either the elder President Bush or President Nixon.

Elsewhere, some who follow the Rasmussen automated poll thought they saw some relief for the President in a small uptick last week. Others were dubious. A look at today’s Rasmussen numbers confirms the skeptics’ instincts: last week’s uptick has vanished.

All of these are topics worthy of more discussion. My cup runneth over.

**MP believes the term “exit poll” should apply only to surveys conducted by intercepting random voters as they leave the polling place, although that distinction may blur as exit pollsters are forced to rely on telephone surveys to reach the rapidly growing number of voters who cast their ballots by mail.

Great post! It’s been a while indeed.

You mention the Mosteller Measures… Martin/Traugott/Kennedy’s (MTK) measure was published recently in POQ. They take the natural log of an odds ratio that is algebraically equivalent to Liddle’s ln(alpha).

Also, the folks at Survey USA proposed a method in Miami that rewards pollsters for allocating undecideds. I think Jay Leve was one of the co-authors. I can’t seem to find that paper… Drats.

BTW – I think you will soon have something published that you can point to in retracting your mea culpa. Sampling error wreaks havoc on exit poll analyses in highly partisan precincts with small sample sizes.
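A minimal sketch of the two log-odds ideas in this comment, assuming the MTK measure takes the form A = ln[(r/d)/(R/D)], where r and d are the poll's Republican and Democratic shares and R and D are the official vote shares. Zero means no bias, and the sign gives the direction. Because only the ratio r/d enters, allocating undecideds proportionally leaves A unchanged. The second function illustrates the point about partisan precincts, assuming a log-odds measure such as ln(alpha) built from a two-candidate exit-poll share p with n interviews: the delta-method standard error, roughly 1/sqrt(n·p·(1 − p)), grows quickly as p approaches 0 or 1 and as n shrinks. Both function names are hypothetical.

```python
import math


def mtk_a(poll_r, poll_d, vote_r, vote_d):
    """Assumed MTK-style accuracy: ln of the poll's R:D odds over the official R:D odds.

    Positive values mean the poll overstated the Republican relative to the
    Democrat; negative values mean the reverse.
    """
    return math.log((poll_r / poll_d) / (vote_r / vote_d))


def log_odds_se(p, n):
    """Approximate (delta-method) standard error of ln(p_hat / (1 - p_hat)).

    p: true two-candidate share in the precinct (0 < p < 1).
    n: number of interviews. The approximation degrades when n*p or n*(1-p) is small.
    """
    return 1 / math.sqrt(n * p * (1 - p))


if __name__ == "__main__":
    # Proportional allocation of undecideds (45/45 -> 50/50) does not change A.
    print(mtk_a(45, 45, 50, 50), mtk_a(50, 50, 50, 50))
    # Same 50 interviews, increasingly one-sided precincts.
    for p in (0.5, 0.8, 0.9, 0.95):
        print(p, round(log_odds_se(p, 50), 3))
```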

With regard to the Columbus Dispatch poll:

When do you look at a poll result versus an election result and suspect election fraud? Obviously a poll cannot prove such a thing, but it seems to me that the specter of fraud has to come up when a well-established polling method gives ridiculously different results from the election count, in a state that has had election fraud issues, in a vote that was billed as an attempt to reform corrupt election methodologies.

Are there any pollster metrics used to indicate when the fault may be with the election and not the poll?

Today’s Talking Points

A quick roundup of a few more interesting items: According to Insight magazine, President Bush “feels betrayed by several of his most senior aides and advisors and has severely restricted access to the Oval Office… Bush has also reduced contact with …

Rick,

To which of Mark’s mea culpas are you referring? On what issue?

Tialoc,

I second your questions on the Ohio referendum results. I do recall Mark writing that the Dispatch mail poll was known to have a much larger average error for statewide referenda than for candidate races.

But I wanted to share one thing with you. Now, I really shouldn’t speak for Mark. But I can imagine that Mark may not want to open that can of worms, unfortunately. And your questions are BIG questions going to the heart of election policy in America, IMHO.

Anyway, I think Mark has felt, rightly or wrongly, that discussions about exit poll vs. election result discrepancies (read: exit poll bias in 2004) have distracted his energies from other polling matters, like the ones he listed in this post instead of talking about Ohio! I hope I’m wrong and he’ll share his thoughts on the Ohio referendum question at some point. But I can understand his being gun-shy, if in fact he’s not planning to write on Ohio right now.

In November 2004 Mark was the ONLY qualified person explaining exit polls to the public. Even if not everyone believes the explanations he has come to accept for the exit poll errors, he made what I felt was a heroic effort to stoke and continue the public dialog on getting to the bottom of that controversial question. I honestly don’t think we would have gotten the answers, or the honest attempts to find them, that we did get without this blog. But I think Mark has said that after a while that dialog was not what he wanted to spend his energies on.

Anyway, that’s just my take. I hope I’m not offending Mark by sort of putting words in his mouth, as his blog did a HUGE service in 2004 on the exit poll debate. I just hope he will still lend his reasoned voice to these controversies.

Alex in Los Angeles