So admit it. At least once a day, possibly more often, you’ve been checking in on the various rolling-average tracking surveys. Most of us have.

If you have been looking at more than one, the results over the last few days may have seemed a bit confusing. Consider:

- As of last night, the ABC News/Washington Post poll reported John Kerry moving a point ahead of George Bush (49% to 48%), a three-point increase for Kerry since Friday.

- Meanwhile, the Reuters/Zogby poll had President Bush “expanding his lead to three points nationwide,” growing his lead from one point to three (48% to 45%) in four days.

- But wait! The Rasmussen poll reported yesterday that John Kerry now leads by two points (48% to 46%), “the first time Senator Kerry has held the lead since August 23.”

- But there’s more! The TIPP poll had Bush moving ahead of Kerry by eight points (50% to 42%) after having led by only a single point (47% to 46%) four days earlier.

What in the world is going on here?

Two words: Sampling Error.

Try this experiment. Copy all of the results from any one of these surveys for the last two weeks into a spreadsheet. Calculate the average result for each candidate. Now check whether any result for either candidate on any day falls outside the survey’s reported margin of error around that average. I have done this for all four surveys, and I see no result beyond the margin of error.
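The spreadsheet check can be sketched in a few lines of Python. This is a minimal sketch, not any pollster’s own method: it assumes the reported margin of error is the usual conservative 95% figure computed at p = 0.5, and the sample size of 1,200 is hypothetical, since the post does not give the tracking polls’ sample sizes. The three Kerry readings are the ABC/Washington Post figures quoted in this post (48%, then 46%, then 49%), not a full two-week series.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Half-width of a 95% confidence interval, in percentage points.

    p=0.5 gives the conservative (largest) margin that pollsters usually report.
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)

def results_outside_moe(results, n):
    """Return the daily results that fall outside average +/- margin of error."""
    average = sum(results) / len(results)
    moe = margin_of_error(n)
    return [r for r in results if abs(r - average) > moe]

# Kerry's ABC/Washington Post readings quoted in the post; n=1200 is hypothetical.
kerry_abc = [48, 46, 49]
print(f"MoE: +/-{margin_of_error(1200):.1f} points")
print("Outside MoE:", results_outside_moe(kerry_abc, 1200))
```

With these inputs the margin is roughly ±2.8 points and none of the three readings strays that far from their average.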

What about the difference between two separate samples? Two results near opposite ends of their error ranges can still differ significantly. A different statistical test (the Z-Test of Independence) checks for such a difference.

So what about the difference between the 46% that the TIPP survey reported four days ago and the 42% they reported yesterday? It is close, but still not significant at a 95% confidence level.

What about the three-point increase for John Kerry (from 46% to 49%) over the last three days on the ABC/Washington Post survey? Nope, not significant either. And keep in mind, they had Kerry at 48% just seven days earlier.
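Both comparisons can be run as a standard two-proportion z-test, which is a reasonable reading of the test named above. This is a sketch under stated assumptions: the sample sizes (900 for TIPP, 1,200 for ABC/Washington Post) are hypothetical, because the post does not report them, and the test assumes the two releases come from non-overlapping samples; rolling tracking polls that share interview days would violate that.

```python
import math

def two_proportion_z(p1, n1, p2, n2):
    """z statistic for the difference between two independent sample proportions,
    using the pooled standard error."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se

def two_sided_p(z):
    """Two-sided p-value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

# Sample sizes are hypothetical; the post does not report them.
tipp_z = two_proportion_z(0.46, 900, 0.42, 900)    # Kerry, TIPP: 46% -> 42%
abc_z = two_proportion_z(0.49, 1200, 0.46, 1200)   # Kerry, ABC/WaPo: 46% -> 49%
for name, z in [("TIPP", tipp_z), ("ABC/WaPo", abc_z)]:
    print(f"{name}: z = {z:.2f}, p = {two_sided_p(z):.3f}, "
          f"significant at 95%: {abs(z) > 1.96}")
```

With these assumed sizes the TIPP change gives z of about 1.71 (p of roughly 0.09): short of the 1.96 needed at 95% confidence, though past the 1.645 cutoff at 90%. The ABC/Washington Post change comes out near z = 1.47.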

Might some of these differences look “significant” if we relaxed the level of confidence to 90% or lower? Yes, but then we would also find more differences by chance alone. If you hunt through all the daily results for all the tracking surveys, you will probably find a significant difference in there somewhere (some call that “data mining”). However, consider the following: calculate the number of potential pairs of differences we could check, given 4 surveys with 13 releases each (2 fewer for ABC/Washington Post) over the last 14 days (I won’t, but it’s a big number). At a 95% confidence level, you should expect one apparently “significant” difference for every 20 pairs you test, even if nothing has really changed. Relax the confidence level to 90%, and you will see “significant” yet meaningless differences for one comparison in ten.
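The count the post declines to do is short arithmetic. A rough sketch, assuming each comparison is a pair of releases and treating one candidate’s number at a time (double the figures for both candidates); the comparisons overlap and are not independent, so the expected counts are a ballpark, not a precise rate. `math.comb` needs Python 3.8 or later.

```python
from math import comb

# Releases per survey over the two weeks, as stated in the post.
releases = {"ABC/Washington Post": 11, "Reuters/Zogby": 13, "Rasmussen": 13, "TIPP": 13}

# Pairs of releases within the same survey, for one candidate's number.
within_survey_pairs = sum(comb(k, 2) for k in releases.values())
# Pairs across all 50 releases, if you also compare one survey against another.
all_pairs = comb(sum(releases.values()), 2)

for confidence in (0.95, 0.90):
    alpha = 1 - confidence
    print(f"{confidence:.0%} confidence: ~{within_survey_pairs * alpha:.0f} of "
          f"{within_survey_pairs} within-survey pairs 'significant' by chance")
print("All pairs, one candidate:", all_pairs)
```

Within-survey comparisons alone give 289 pairs per candidate: roughly 14 chance “significant” differences at 95% confidence and 29 at 90%.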

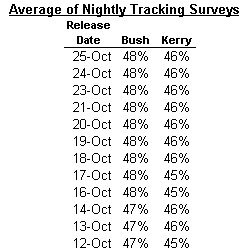

If all of this is just too confusing, consider the following. I averaged the daily results released by all four surveys for the last two weeks (excluding October 15 and 22, when ABC/Washington Post did not release data). Here is the “trend” I get:

If all four surveys — or even three of the four — were showing small, non-significant changes in the same direction, I would be less skeptical. We could be more confident that the effectively larger sample of four combined surveys might make a small change significant. However, when the individual surveys show non-significant changes that zig and zag (or Zog?) in opposite directions, the odds are high that all of this is just random variation.
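A sketch of the averaging and of the “effectively larger sample” idea. It uses the most recent figures quoted at the top of this post, treated (as an assumption) as if released on the same day, and a hypothetical 1,000 likely voters per survey. The pooled margin of error is an idealization: the four surveys use different methods, so pooling them is not strictly legitimate.

```python
import math

def average_across_surveys(readings):
    """Average one day's readings, skipping surveys with no release (None)."""
    released = [r for r in readings.values() if r is not None]
    return sum(released) / len(released)

def margin_of_error(n, p=0.5, z=1.96):
    """Conservative 95% margin of error, in percentage points."""
    return 100 * z * math.sqrt(p * (1 - p) / n)

# Latest figures quoted in the post: (Bush, Kerry).
latest = {"ABC/WaPo": (48, 49), "Reuters/Zogby": (48, 45),
          "Rasmussen": (46, 48), "TIPP": (50, 42)}
bush = average_across_surveys({k: v[0] for k, v in latest.items()})
kerry = average_across_surveys({k: v[1] for k, v in latest.items()})
print(f"Average: Bush {bush:.1f}, Kerry {kerry:.1f}")

n_per_survey = 1000  # hypothetical sample size per survey
print(f"One survey:  +/-{margin_of_error(n_per_survey):.1f} points")
print(f"Four pooled: +/-{margin_of_error(4 * n_per_survey):.1f} points")
```

The four readings average to Bush 48, Kerry 46. Quadrupling the sample only halves the margin of error (about ±3.1 to about ±1.5 points here), because the margin shrinks with the square root of the sample size.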

Disclaimer (since someone always asks): Yes, these are four different surveys involving different questions, sample sizes and sampling methodologies (although their methods are constant from night to night). One (Rasmussen) asks questions using an automated recording rather than interviewers. All four weight by party identification, though to different targets and some more consistently than others. So, by the rule book, we should not simply combine them. Also, averaging the four does not yield a more accurate model of the likely electorate than any one alone. My point is simply that averaging the four surveys demonstrates that much of the apparent variation over the last two weeks is random noise.

Also, as with investing, past performance is no guarantee of future gain (or loss). Just because things look stable over the last two weeks doesn’t mean they won’t change tomorrow.

So we’ll just have to keep checking obsessively…and remembering sampling error.

UPDATE: Prof. Alan Abramowitz makes a very similar point over at Ruy Teixeira’s Donkey Rising.

Correction: The original version of this post wrongly identified the TIPP survey as “IBD/TIPP.” While TIPP did surveys in partnership with Investor’s Business Daily earlier in the year, the current tracking poll is just done by TIPP. My bad – sorry for the error.

Great post.

We imbue numbers with such factual certainty that we forget they are only a representation of an operationally-defined, underlying concept which in itself could be flawed – in this case, “likely voters”.

Never mind that, at a 95% confidence level, 1 in 20 polls will on average fall outside the MoE – by any possible margin!
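The 1-in-20 figure can be checked by simulation. A minimal sketch, assuming simple random sampling from a population in which exactly 45% back one candidate (an arbitrary choice) and 1,000 respondents per poll; it counts how often a poll’s result lands farther from the true value than the conservative 95% margin of error.

```python
import math
import random

def share_outside_moe(true_p=0.45, n=1000, polls=2000, seed=1):
    """Fraction of simulated polls whose estimate misses true_p by more than the MoE."""
    rng = random.Random(seed)
    moe = 1.96 * math.sqrt(0.25 / n)  # conservative 95% margin, as a proportion
    misses = 0
    for _ in range(polls):
        # Each respondent independently supports the candidate with probability true_p.
        estimate = sum(rng.random() < true_p for _ in range(n)) / n
        if abs(estimate - true_p) > moe:
            misses += 1
    return misses / polls

print(f"Polls outside the margin of error: {share_outside_moe():.1%}")
```

The printed share should come out close to 5%, with the misses falling on both sides of the true value.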

Wow. That chart you created showing a consistent 2-point advantage for Bush, and near-identical numbers from beginning to end, puts it all in perspective. It’s all meaningless at this point (which didn’t stop Slate from trumpeting one day when Kerry was ahead on its main headline area, then relegating Bush’s return to the top a day later to the sidebar).

It seems clear to me that the election is so close that it will be decided by one– or several– of these oddball interpretation factors– youth registration, undecideds breaking for the incumbent or the challenger, a rise in black support for Bush, randomness in the electoral college, etc. The problem is, NO ONE can know at this point which of those factors is valid and which is total bull– in this particular election with these particular candidates. You pick which ones you believe have validity based on which ones support your prejudices (thus Andrew Sullivan can’t imagine Bush winning now, because he assumes every undecided will come down where he came down on Bush).

Or as William Goldman said of Hollywood, the only rule you can count on is, nobody knows anything.

I think we are all having trouble seeing the wood from the trees with regard to the sheer volume of polling data in the run-up to this election. I think what is ultimately going to decide this election is the percentage increase in voter turnout vis-à-vis the last election. I think that if there are circa 5 million additional voters in this election, Senator Kerry will win by a small but comfortable margin. Do any of the polls reflect the anticipated increase in voter turnout for this election?

But G– increased voter turnout only works for Kerry if you assume that it’s young people who will vote Democratic because MTV tells them to (never mind that young people seem to be more pro-Bush than the average voter), say, and not the “missing evangelicals” who will show up this time. The fact is, with half of eligible people not voting, there are lots of theoretical groups to be found in that body who COULD turn the election– but little concrete evidence to say any one group actually will turn out.

When you look at the tracking polls, it looks like there’s an INVERSE correlation between TIPP and Zogby on the one hand, and WaPo and Rasmussen on the other. Is it possible that their likely voter screens measure different things? Might one set of polls weigh self-described intensity more heavily than voting history, for instance, so that as a group becomes more intense they get counted in one set of polls, while they are still excluded from the other set of polls? I have no way of verifying this, but the inverse correlation effect does seem to be fairly strong.

Mike G: Isn’t it sort of a rule of thumb that (in general) the Republicans want low turnout, and the Democrats want high turnout?

I’ve heard this on news programs, not only this year but in past years, and if you think about it in terms of traditional non-voters it makes sense. “Missing evangelicals” aside, most of the traditional non-voting groups would probably lean Democratic, wouldn’t they?

And, without giving validity to, or supporting, any of the conspiracy theories, it seems like most of the voter suppression theories are targeting the Republicans as the likely culprits. I haven’t seen too many Dems accused of voter suppression schemes. If you reason backwards, you could conclude that if there’s a nugget of truth to any of them, it’s because Republicans feel low turnout is to their advantage and high turnout is to the Dems’ advantage.

This post is right on. I can’t stand the way the polls are taken at face value wrt minuscule ‘leads’ – on Foxnews, for instance, they were averaging a bunch of polls in one race and saying they showed Salazar “ahead by 0.6%”. As anyone who knows anything about statistics is aware, this is 100% meaningless. To demonstrate, I wrote a computer program that randomly assigned ‘candidate preferences’ to 50000 ‘voters’, then ran ten random polls of 1000 voters each and compared them to the actual proportions in the population. Here are the results, which show how far off even a perfectly conducted poll can be, and how COMPLETELY meaningless small percentage ‘leads’ are:

| Poll | Commander Chimp (%) | Senator Ketchup (%) | Undecided (%) |
|---|---|---|---|
| #1 | 43.7 | 47.0 | 9.3 |
| #2 | 44.8 | 45.7 | 9.5 |
| #3 | 44.5 | 45.2 | 10.3 |
| #4 | 44.5 | 45.7 | 9.8 |
| #5 | 45.6 | 44.8 | 9.6 |
| #6 | 44.4 | 45.4 | 10.2 |
| #7 | 46.0 | 46.1 | 7.9 |
| #8 | 46.0 | 44.5 | 9.5 |
| #9 | 42.9 | 47.7 | 9.4 |
| #10 | 47.8 | 42.3 | 9.9 |
| Actual | 44.938 | 45.086 | 9.976 |
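The commenter’s experiment can be reproduced along these lines. This is a sketch under stated assumptions: the comment doesn’t say how preferences were assigned, so each of the 50,000 voters here gets an assumed 45/45/10 chance of each option (consistent with the “Actual” row, but not confirmed by the comment), and ten polls of 1,000 are then drawn without replacement. Results depend on the random seed and will not reproduce the numbers above.

```python
import random
from collections import Counter

CHOICES = ["Commander Chimp", "Senator Ketchup", "Undecided"]
WEIGHTS = [0.45, 0.45, 0.10]  # assumed; the comment doesn't state the probabilities

def make_population(size, rng):
    """Randomly assign a preference to each voter."""
    return rng.choices(CHOICES, weights=WEIGHTS, k=size)

def shares(voters):
    """Percentage of the given voters choosing each option."""
    counts = Counter(voters)
    return {c: 100 * counts[c] / len(voters) for c in CHOICES}

def run_polls(population, n_polls, poll_size, rng):
    """Draw simple random samples (without replacement) and tabulate each one."""
    return [shares(rng.sample(population, poll_size)) for _ in range(n_polls)]

rng = random.Random(2004)
population = make_population(50_000, rng)
actual = shares(population)
polls = run_polls(population, 10, 1000, rng)

for i, poll in enumerate(polls, 1):
    lead = poll["Senator Ketchup"] - poll["Commander Chimp"]
    row = ", ".join(f"{c} {v:.1f}" for c, v in poll.items())
    print(f"Poll #{i}: {row} (Ketchup lead {lead:+.1f})")
print("Actual:", ", ".join(f"{c} {v:.3f}" for c, v in actual.items()))
```

Each run typically shows the “lead” flipping sign across polls even though every poll is drawn from the same fixed population.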

Anthony– That’s my point, as it has been Mystery Pollster’s about cell phones, for instance: the rules of thumb are of dubious validity this time out. One rule of thumb is that the young are liberal; consequently, get them out in higher numbers and they help Kerry. Except, what do you know, liberal young people, who favor gay marriage and drug legalization and so on, turn out to support Bush on the war on terror. Likewise the cell phone theory: folks with no land line may be young technoliberals, unless they’re all young technolibertarians who read Little Green Footballs, and aren’t necessarily young in the first place, either. We just don’t know. 9-11 may have scrambled the playing field and invalidated a number of theories, but the actual evidence for that won’t arrive until the election.

That Republicans may be encouraging staying home, and Democrats are encouraging turn out, may simply demonstrate that both are still fighting the last election, which is still the most logical thing to do, but has an unusually high chance of being unsuccessful this time.

Mike G: I respectfully disagree. The concept that in THIS election, the rules of thumb are of dubious validity, is to me an unproven statement that relies more on hope than on fact.

I don’t believe that a majority of young voters support Bush on the war on terror. I have never seen a poll or survey that showed this.

In contrast, I have seen recent polls of self-described “cellular only” voters who went for Kerry by a statistically significant margin. One number I remember from just yesterday was an 18% margin for Kerry in an ABC poll.

I would ask you this: if the low turnout/high turnout strategies are a thing of the past, why are the campaigns still (apparently) pursuing the same strategies? These men and women get paid a lot of money to get it right, and I can’t believe that in the aggregate they would risk a campaign of any size (let alone the Presidency) on an unfounded or outdated theory. In other words: why might you be right and all the high-priced strategists wrong?

Looked at from the journalists’ perspective, there is no virtue in unchanging polls. Where’s the story?

What the story seems to be is that Bush has a small lead and has had that lead since September. It ebbs and flows, but, on the popular vote it seems constant.

In the battleground states the picture is more complicated because a lot of the polling uses smaller samples, which increase the margin of error and widen the confidence interval.

As this really all comes down to the EC, the real question is voting efficiency: if Kerry is a) behind nationally by a couple of points and b) going to run up big majorities in a number of large states, his voting efficiency is low and the election is Bush’s.

In the perfect storm a candidate wins enough states to lock up the EC and wins them by a tiny percentage of the vote. Thus every vote is efficient for that winning candidate.

re: G, Mike G, and Anthony:

I thought that polls had shown newly registered voters were far more likely to be Democrats, and that’s why Dems are trying to encourage voter turnout and Repubs are allegedly trying to suppress it.

Re: the tracking polls. Mystery Pollster, I found your site a week ago, and I wish I’d known about you sooner! Your articles are so refreshing–actual INFORMATION and not just meaningless snippets! I’ve always wondered what they mean when they dismissively talk about “the margin of error”, and now I finally have an idea.

I have to say that it’s sites like this that are making the web the new and far improved source of news.

So anyway, enough praiseful banter… Re: the cell phone theory discussion. To my mind, there’s got to be some kind of Democratic advantage. Cell phones are more prevalent in more densely populated areas, which are heavily Democratic. Out in the sticks, it’s Bush, at least, if not the Republicans generally, whose message is resonating.

Add more people to an area, and suddenly everyone’s voting for Kerry/Gore, go figure. Maybe Mystery Pollster can do a column on this phenomenon? Why, why, why?

Interesting short piece on polling from a Republican pollster.

http://www.cbsnews.com/stories/2004/10/26/opinion/main651508.shtml

This reinforces what Mystery Pollster has been saying.

So, what do you think of Sam Wang’s meta-analysis of the polls at:

http://synapse.princeton.edu/~sam/pollcalc.html

And as we’ve learned, national tracking polls are meaningless because this all comes down to a handful of states and the electoral college.

Any ideas as to why those daily averages are so stable? Are few people changing their minds? Are people changing their minds randomly so that the changes cancel out? Are the numbers misleading and/or inaccurate? Or something else?

Mike G,

If Truth is the first casualty of War, then I believe facts are probably the first casualty of an election campaign. I agree with you about not assuming that young people will vote in larger numbers for Kerry. I accept that different “facts” paint different pictures in this campaign in particular. However, I also believe there is a big, increasing and disenfranchised ‘underclass’ (for want of a better word) who will show up and vote in this election for the first time, and vote for Kerry. These are the people who are always overlooked in our society, who don’t have cell phones or Internet access, who aren’t traditionally targeted by pollsters. These people are bonded not by race, colour or creed but by increasing poverty. I believe that they understand how critical a time this is for them to stand up for themselves. And again, I believe that they will vote in larger numbers for Kerry.

I’m going on my gut feeling here because, after the last 3 weeks of tracking opinions etc., I’m polled out! I’m predicting a turnout over 55%. What Bush wouldn’t give for that number to appear in a poll!

Looking over these posts, it’s pretty clear that few people took a statistics class, or at least remember any of it.

First, there are two kinds of error in polling sampling: one is a plus/minus on the questions (+/- 3 or 4% on a binary question), and the other is a 1 in 20 chance that the survey sample simply bears no resemblance to the universe from which the sample is drawn.

In other words, there’s a 5% chance that your robo-dialing machines called a hugely disproportionate group of whatevers among its 600 surveys, and that your results should be flushed down the toilet. That’s true up to sample sizes of 2,000 to 3,000 interviews, and doesn’t decrease that rapidly as sample size increases.

One oddity of statistical analysis is that the size of the universe is generally unimportant past a certain N size. So the state polls, as long as they have 400+ respondents, are not generally less accurate (and likely more so) than national surveys.
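The point about universe size can be illustrated with the finite population correction. A sketch assuming simple random sampling and the conservative p = 0.5; the population sizes are round hypothetical figures, not actual electorate counts.

```python
import math

def margin_of_error(n, population, p=0.5, z=1.96):
    """95% margin of error in points, with the finite population correction."""
    fpc = math.sqrt((population - n) / (population - 1))
    return 100 * z * math.sqrt(p * (1 - p) / n) * fpc

n = 600
for population in (2_000, 5_000_000, 120_000_000):
    print(f"N = {population:>11,}: +/-{margin_of_error(n, population):.2f} points")
```

Once the population is in the millions the correction is negligible: a 600-person sample has essentially the same margin (about ±4 points) whether it is drawn from one state or the whole country. The margin only shrinks noticeably when the sample is a sizable fraction of the population, as in the 2,000-person case.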

Looking at the national polling numbers is, by the simplest of notions, nothing more than idle chatter. Why? Winning the popular vote doesn’t get you a cup of coffee. (Right, Senator Gore?)

Looking at the state polls in aggregate will give you a very good idea of what’s going to happen. If you have an irrational fear of numbers (you shouldn’t be this far down this thread, for one), just read where the campaigns are directing their resources over these last few days. That will tell you what’s in play and what isn’t.

- Ohio: no
- Iowa: yes
- Florida: yes
- New Hampshire: no
- Wisconsin: yes
- Pennsylvania: no

So glad that I stumbled onto this site. Johnny O has it down pat. The potential for sampling errors in statewide polls is so great as to make the “results” of said polling nothing more than TV fodder. Further on robo-polling, who listens to the machine and answers those questions anyway??? PLEA FOR SANITY: Please let this election be over soon.

Electoral College Update 33

Bush 290, Kerry 248 electoral votes. In this latest projection, based on averages of all state-by-state polls within the last 14 days, Hawaii, Minnesota, New Mexico, and Wisconsin have shifted to Bush since last week.